Migrate from Amplitude to PostHog


Prior to starting a historical data migration, ensure you do the following:

- Create a project on our US or EU Cloud.

- Sign up for a paid product analytics plan on the billing page (historic imports are free, but the plan unlocks the necessary features).

- Set the `historical_migration` option to `true` when capturing events in the migration. This is automated if you are running a managed migration.

✨ Use our managed migration instead: We recently added a new managed migration for Amplitude. It automatically exports data from Amplitude, transforms it into PostHog's event schema, and imports it into your PostHog project. Get started with it in managed migrations in-app or learn more in the managed migrations docs.

Migrating from Amplitude is a two-step process:

1. Export your data from Amplitude using the organization settings export, the Amplitude Export API, or the S3 export.

2. Import the data into PostHog using PostHog's Python SDK or `batch` API with the `historical_migration` option set to `true`. Other libraries don't support historical migrations yet.
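If you use the `batch` API rather than the Python SDK, the request can look roughly like the sketch below. It's a minimal sketch, assuming the US Cloud endpoint `https://us.i.posthog.com/batch/` (EU Cloud uses `eu.i.posthog.com`) and events already converted to PostHog's shape with ISO 8601 string timestamps. `build_batch_request` is a hypothetical helper, not part of any PostHog library.

```python
import json
import urllib.request
from typing import Iterable

# Assumed US Cloud endpoint; EU Cloud projects would use https://eu.i.posthog.com/batch/
BATCH_URL = "https://us.i.posthog.com/batch/"


def build_batch_request(api_key: str, events: Iterable[dict]) -> urllib.request.Request:
    """Build a POST to PostHog's batch endpoint with historical_migration enabled."""
    body = {
        "api_key": api_key,
        "historical_migration": True,  # flags the whole batch as a historical import
        "batch": [
            {
                "event": e["event"],
                # distinct_id travels inside properties alongside the event's own properties
                "properties": {**e.get("properties", {}), "distinct_id": e["distinct_id"]},
                "timestamp": e["timestamp"],  # ISO 8601 string, e.g. "2024-01-01T12:00:00Z"
            }
            for e in events
        ],
    }
    return urllib.request.Request(
        BATCH_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

You'd send the request with `urllib.request.urlopen(req)`, splitting a large import into several smaller batches rather than one huge payload.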

Want us to take care of the migration for you? Our forward-deployed engineers can get you up and running quickly.

Exporting data from Amplitude

There are three ways to export data from Amplitude.

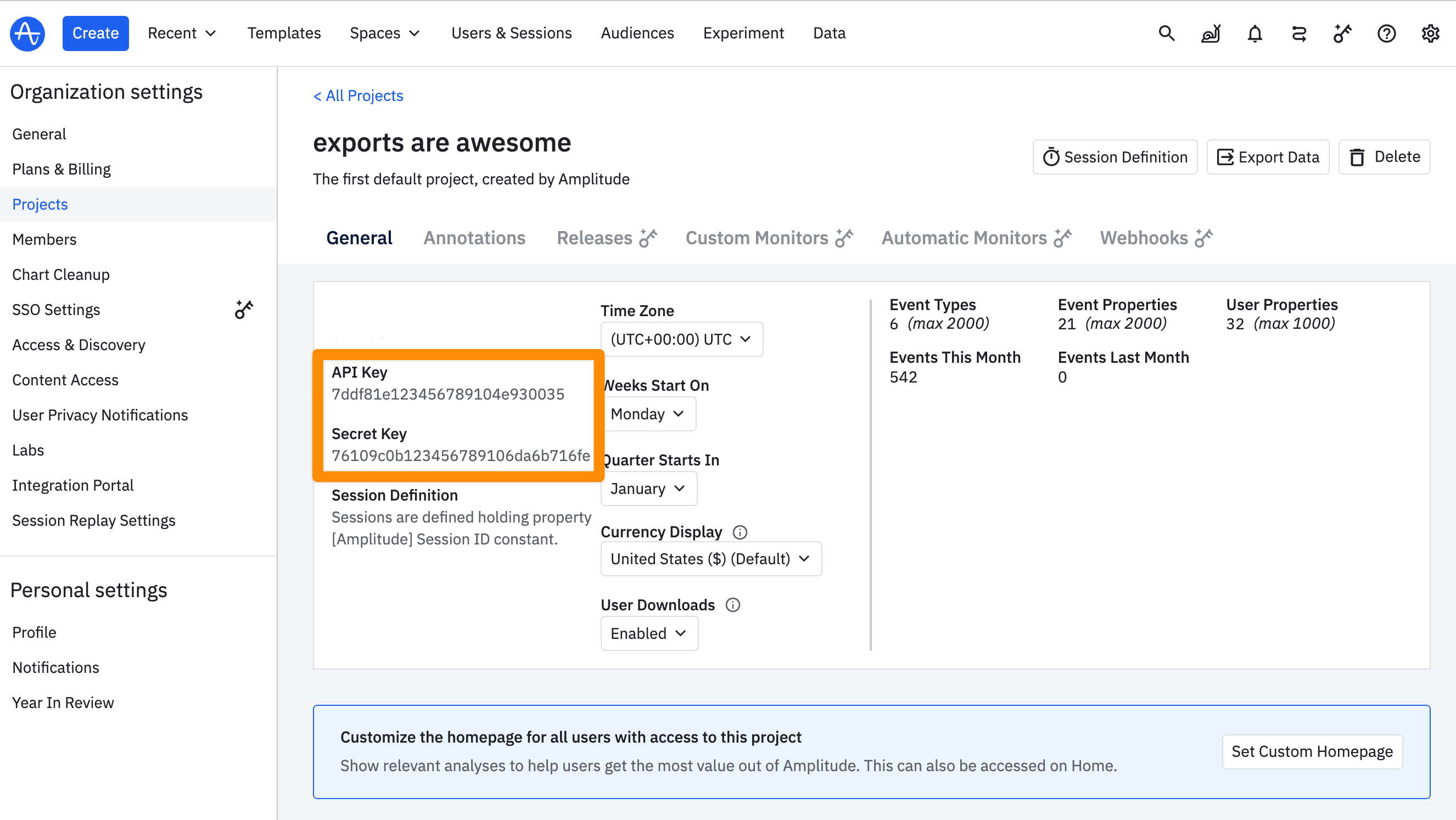

1. Organization settings export

The simplest way is to go to your project in your organization settings and click the Export Data button.

2. Export API

To export data using Amplitude's Export API, start by getting your project's API key and secret key from your organization settings.

You can then use these credentials to authenticate a request to the Export API for the time range you want to export.
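A minimal sketch of that request using only Python's standard library. It assumes the US-region endpoint `https://amplitude.com/api/2/export` (EU data residency projects use a different host) and HTTP basic auth with the API key as the username and the secret key as the password. `start` and `end` are hour-granularity timestamps in `YYYYMMDDTHH` format. The helper names `build_export_request` and `download_export` are hypothetical.

```python
import base64
import urllib.parse
import urllib.request
from datetime import datetime

# Assumed US-region Export API endpoint; check Amplitude's docs for other regions.
EXPORT_URL = "https://amplitude.com/api/2/export"


def format_hour(dt: datetime) -> str:
    """Format a datetime as YYYYMMDDTHH, the hour-level format the Export API takes."""
    return dt.strftime("%Y%m%dT%H")


def build_export_request(api_key: str, secret_key: str,
                         start: datetime, end: datetime) -> urllib.request.Request:
    """Build an authenticated GET request for events between start and end."""
    query = urllib.parse.urlencode({"start": format_hour(start), "end": format_hour(end)})
    # Basic auth: "api_key:secret_key" base64-encoded.
    token = base64.b64encode(f"{api_key}:{secret_key}".encode()).decode()
    return urllib.request.Request(
        f"{EXPORT_URL}?{query}",
        headers={"Authorization": f"Basic {token}"},
    )


def download_export(request: urllib.request.Request, path: str) -> None:
    """Stream the zipped export archive to disk in 1 MiB chunks."""
    with urllib.request.urlopen(request) as response, open(path, "wb") as out:
        while chunk := response.read(1 << 20):
            out.write(chunk)
```

For example, `download_export(build_export_request(API_KEY, SECRET_KEY, datetime(2024, 1, 1, 0), datetime(2024, 1, 31, 23)), "amplitude_export.zip")` saves the events from that range as a zip archive.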

3. S3 export

If your data exceeds Amplitude's export size limit, you can use their S3 export.

Importing Amplitude data into PostHog

Amplitude exports data in a zipped archive of JSON files. To get this data into PostHog, you need to:

1. Unzip and read the data

2. Convert the events from Amplitude's schema to PostHog's

3. Capture the events into PostHog using the `historical_migration` option

4. Alias device IDs to user IDs

Steps 1, 3, and 4 are relatively straightforward, but step 2 requires more explanation.
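Step 1 can be sketched with the standard library alone. This assumes the archive holds gzipped, newline-delimited JSON files (names ending in `.json.gz`) with one event per line, which is the layout Amplitude's Export API typically produces; adjust the filter if your export, such as an S3 export, is laid out differently. `read_amplitude_export` is a hypothetical helper name.

```python
import gzip
import json
import zipfile
from typing import Iterator


def read_amplitude_export(zip_path: str) -> Iterator[dict]:
    """Yield every event from an Amplitude export archive.

    Assumes each relevant member is a gzipped file of newline-delimited JSON.
    """
    with zipfile.ZipFile(zip_path) as archive:
        for name in sorted(archive.namelist()):
            if not name.endswith(".json.gz"):
                continue  # skip directory entries and unrelated files
            # gzip.open accepts an open file object, so members are decompressed as a stream.
            with archive.open(name) as raw, gzip.open(raw, "rt", encoding="utf-8") as lines:
                for line in lines:
                    line = line.strip()
                    if line:  # ignore blank lines
                        yield json.loads(line)
```

Because it's a generator, it streams events one at a time instead of loading the whole export into memory.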

Converting Amplitude events

Although Amplitude events have a similar structure to PostHog's, you need to convert them to PostHog's schema because many events and properties use different keys. For example, autocaptured events and properties in PostHog often start with `$`.

You can see Amplitude's event structure in their Export API documentation and PostHog's autocapture event structure in our autocapture docs.

Some conversions needed include:

- Changing event names like `[Amplitude] Page Viewed` to `$pageview`

- Changing event property keys like `[Amplitude] Page Location` to `$current_url`

- Translating `EMPTY` values in `user_properties` to `null`

- Changing `event_time` to an ISO 8601 formatted `timestamp`

- Using `$set` and `$set_once` for person properties

Converting the data ensures that it matches the data PostHog captures, so imported and newly captured events can be analyzed together.
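The conversions listed above can be sketched as a single function. This is a minimal sketch, not an official mapping: `EVENT_NAME_MAP` and `PROPERTY_KEY_MAP` are hypothetical, deliberately tiny tables; sending `initial_*` user properties through `$set_once` is an assumption about which properties should never be overwritten; and `event_time` is assumed to be a UTC timestamp like `2024-01-01 12:00:00.000000`. The timestamp comes back as a timezone-aware `datetime`, which the Python SDK accepts for its `timestamp` argument and serializes to ISO 8601.

```python
from datetime import datetime, timezone
from typing import Optional

# Hypothetical, deliberately tiny mappings: extend them for your own events and properties.
EVENT_NAME_MAP = {"[Amplitude] Page Viewed": "$pageview"}
PROPERTY_KEY_MAP = {"[Amplitude] Page Location": "$current_url"}


def parse_event_time(value: str) -> datetime:
    """Parse Amplitude's event_time, assumed to be UTC (e.g. '2024-01-01 12:00:00.000000')."""
    parsed = datetime.fromisoformat(value)
    return parsed if parsed.tzinfo else parsed.replace(tzinfo=timezone.utc)


def convert_event(event: dict) -> Optional[dict]:
    """Map one Amplitude event to the fields a PostHog capture call needs."""
    # Identified events use user_id; anonymous ones fall back to device_id.
    distinct_id = event.get("user_id") or event.get("device_id")
    if not distinct_id:
        return None  # nothing to attribute the event to

    # Rename known property keys and keep the rest unchanged.
    properties = {
        PROPERTY_KEY_MAP.get(key, key): value
        for key, value in (event.get("event_properties") or {}).items()
    }

    # Translate the "EMPTY" placeholder in user properties to None (sent as null).
    user_props = {
        key: (None if value == "EMPTY" else value)
        for key, value in (event.get("user_properties") or {}).items()
    }
    # Assumption: "initial_*" properties should never be overwritten, so use $set_once.
    set_once = {k: v for k, v in user_props.items() if k.startswith("initial_")}
    set_props = {k: v for k, v in user_props.items() if not k.startswith("initial_")}
    if set_props:
        properties["$set"] = set_props
    if set_once:
        properties["$set_once"] = set_once

    return {
        "distinct_id": str(distinct_id),
        "event": EVENT_NAME_MAP.get(event["event_type"], event["event_type"]),
        "properties": properties,
        "timestamp": parse_event_time(event["event_time"]),
    }
```

Events without a mapping keep their Amplitude names and keys, so you can grow the two tables incrementally as you review your data.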

Example Amplitude migration script

A migration script needs to get the Amplitude data from the export folder, unzip it, convert it to PostHog's schema, and then capture it in PostHog. The details will depend on your infrastructure and the structure of your Amplitude data, so treat any script as a starting point.
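The capture step of such a script can be sketched as follows. It's a minimal sketch, assuming events have already been converted into dicts with `distinct_id`, `event`, `properties`, and `timestamp` keys, and that your posthog-python version accepts `historical_migration=True` when creating the client and keyword arguments for `capture`. `make_client` and `send_events` are hypothetical helpers; `send_events` takes any object with `capture` and `flush` methods, so you can test it without network access.

```python
from typing import Iterable, Protocol


class CaptureClient(Protocol):
    """The subset of the PostHog Python client this sketch relies on."""

    def capture(self, **kwargs) -> object: ...

    def flush(self) -> None: ...


def make_client(api_key: str, host: str = "https://us.i.posthog.com"):
    """Create a PostHog client with historical_migration enabled (EU: https://eu.i.posthog.com)."""
    from posthog import Posthog  # imported lazily so send_events has no hard dependency

    return Posthog(api_key, host=host, historical_migration=True)


def send_events(client: CaptureClient, events: Iterable[dict], flush_every: int = 5000) -> int:
    """Capture already-converted events, flushing periodically so the queue never fills up."""
    sent = 0
    for e in events:
        client.capture(
            distinct_id=e["distinct_id"],
            event=e["event"],
            properties=e.get("properties", {}),
            timestamp=e["timestamp"],
        )
        sent += 1
        if sent % flush_every == 0:
            client.flush()  # blocks until the queued events have been sent
    client.flush()  # send whatever is left in the queue
    return sent
```

Flushing every 5,000 events keeps the client's queue (10,000 events by default) from filling up during large imports, and the final flush ensures nothing is left unsent when the script exits.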


Why am I seeing "analytics-python queue is full"? The default queue size for ingesting is 10,000. If you are importing more than 10,000 events, call `posthog.flush()` every 5,000 messages to block the thread until the queue empties out.

Why are my event and DAU counts lower in PostHog than in Amplitude? PostHog blocks bot traffic by default, while Amplitude doesn't. You can see the full list of bots PostHog blocks in our docs.

Aliasing device IDs to user IDs

In addition to capturing the events, we want to combine anonymous and identified users. In Amplitude, events use the device ID before a user is identified and the user ID after:

| Event | User ID | Device ID |
|---|---|---|
| Application installed | null | 551dc114-7604-430c-a42f-cf81a3059d2b |
| Login | 123 | 551dc114-7604-430c-a42f-cf81a3059d2b |
| Purchase | 123 | 551dc114-7604-430c-a42f-cf81a3059d2b |

We want to attribute "Application installed" to the user with ID 123, so we also need to call `alias` with both the device ID and the user ID.
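One way to find those pairs is to scan the Amplitude events for any device ID that also appears with a user ID. This sketch assumes raw Amplitude events with `device_id` and `user_id` fields and the Python SDK's `alias(previous_id=..., distinct_id=...)` keyword arguments; `device_user_pairs` and `alias_devices` are hypothetical helpers.

```python
from typing import Iterable, Iterator, Protocol, Tuple


class AliasClient(Protocol):
    """The part of the PostHog client this sketch needs."""

    def alias(self, **kwargs) -> object: ...


def device_user_pairs(events: Iterable[dict]) -> Iterator[Tuple[str, str]]:
    """Yield each (device_id, user_id) pair once, in first-seen order."""
    seen = set()
    for e in events:
        device_id, user_id = e.get("device_id"), e.get("user_id")
        if not device_id or not user_id:
            continue  # events without both IDs can't link a device to a user
        pair = (str(device_id), str(user_id))
        if pair not in seen:
            seen.add(pair)
            yield pair


def alias_devices(client: AliasClient, events: Iterable[dict]) -> int:
    """Link every device ID to the user ID it was later identified as."""
    count = 0
    for device_id, user_id in device_user_pairs(events):
        client.alias(previous_id=device_id, distinct_id=user_id)
        count += 1
    return count
```

For the table above, this makes a single call linking `551dc114-7604-430c-a42f-cf81a3059d2b` to user `123`, which attributes "Application installed" to that user.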

Since you only need to do this once per user, ideally you'd store a record (e.g. a SQL table) of which users you've already aliased, so you don't send the same alias events to PostHog multiple times.
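A minimal sketch of that record using Python's built-in `sqlite3` module. The `aliased` table and the `AliasLog` class are hypothetical; the table is keyed on the (device ID, user ID) pair so a user with several devices still gets each device aliased exactly once. `client` is assumed to be a PostHog client, or anything with the same `alias` method.

```python
import sqlite3
from typing import Any


class AliasLog:
    """SQLite-backed record of device/user pairs that have already been aliased."""

    def __init__(self, path: str = "aliases.db") -> None:
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS aliased ("
            " device_id TEXT NOT NULL,"
            " user_id TEXT NOT NULL,"
            " PRIMARY KEY (device_id, user_id))"
        )
        self.conn.commit()

    def alias_once(self, client: Any, device_id: str, user_id: str) -> bool:
        """Call alias only if this pair isn't recorded yet; return True if it was sent."""
        cur = self.conn.execute(
            "INSERT OR IGNORE INTO aliased (device_id, user_id) VALUES (?, ?)",
            (device_id, user_id),
        )
        if cur.rowcount == 0:
            return False  # already aliased in an earlier run
        try:
            client.alias(previous_id=device_id, distinct_id=user_id)
        except Exception:
            self.conn.rollback()  # drop the record so the pair is retried later
            raise
        self.conn.commit()  # persist only after the alias call succeeded
        return True

    def close(self) -> None:
        self.conn.close()
```

Because the row is only committed after `alias` succeeds, a failed call leaves no record and the pair is retried on the next run.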