API queries

API queries enable you to query your data in PostHog. This is useful for:

- Building embedded analytics.

- Pulling aggregated PostHog data into your own or other apps.

When should you not use API queries?

- When you want to export large amounts of data. Use batch exports instead.

- When you want to send data to destinations like Slack or webhooks immediately. Use real-time destinations instead.

- When you need data from long-running queries with high memory usage at regular intervals. Use materialized views with a schedule instead. You can query these through SQL and get faster results.

Prerequisites

Using API queries requires:

- A PostHog project and its project ID, which you can get from your project settings.

- A personal API key for your project with the Query Read permission. You can create this in your user settings.

Creating a query

To create a query, make a POST request to the /api/projects/:project_id/query/ endpoint. The body of the request should be a JSON object with a query property, which is itself an object containing a kind property and a query property.

By default, API queries return up to 100 rows. If you specify your own LIMIT value, you can return up to 50k rows per query before we suggest paginating.

For example, to create a query that gets events where the $current_url contains blog, you use kind: HogQLQuery and SQL like:
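A minimal Python sketch of such a request, using only the standard library. The host (https://us.posthog.com), the Bearer authorization header, the environment variable names, and the exact SQL are assumptions for illustration, so adjust them to your own instance and data:

```python
import json
import os
import urllib.request

# Assumptions: US Cloud host and hypothetical environment variable names.
POSTHOG_HOST = os.environ.get("POSTHOG_HOST", "https://us.posthog.com")
PROJECT_ID = os.environ.get("POSTHOG_PROJECT_ID", "12345")
API_KEY = os.environ.get("POSTHOG_PERSONAL_API_KEY", "phx_placeholder")


def build_blog_query() -> dict:
    """Request body: a `query` object with `kind` and `query`, plus a `name`."""
    return {
        "query": {
            "kind": "HogQLQuery",
            "query": (
                "SELECT event, timestamp, properties.$current_url "
                "FROM events "
                "WHERE properties.$current_url LIKE '%blog%' "
                "AND timestamp >= now() - INTERVAL 7 DAY "
                "LIMIT 100"
            ),
        },
        "name": "blog_events_last_7_days",
    }


def run_query(body: dict) -> dict:
    """POST the body to /api/projects/:project_id/query/ and decode the JSON reply."""
    request = urllib.request.Request(
        f"{POSTHOG_HOST}/api/projects/{PROJECT_ID}/query/",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",  # assumed auth scheme
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


# Usage (performs a real HTTP call, so it is left commented out):
# rows = run_query(build_blog_query())["results"]
```

Building the body and sending it are kept separate so the body can be inspected or logged before any request is made.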

This is also useful for querying non-event data like persons, data warehouse, session replay metadata, and more. For example, to get a list of all people with the email property:
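As a rough sketch, the request body for such a persons query could be built like this. The selected columns and the IS NOT NULL filter are assumptions about how you want to define "has the email property":

```python
def build_persons_with_email_query(limit: int = 100) -> dict:
    """Request body for listing persons that have an email property set."""
    sql = (
        "SELECT id, properties.email "
        "FROM persons "
        "WHERE properties.email IS NOT NULL "
        f"LIMIT {int(limit)}"
    )
    return {
        "query": {"kind": "HogQLQuery", "query": sql},
        "name": "persons_with_email",
    }
```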

Every query you run is logged in the query_log table along with details like duration, read bytes, read rows, and more. The name parameter you provide appears in this log, making it easier to identify and analyze your queries.

Writing performant queries

When writing custom queries, the burden of performance falls on you. PostHog handles performance for queries we own (for example, in product analytics insights and experiments), but because performance depends on how queries are structured and written, we can't optimize yours for you. Large data sets in particular require careful attention to performance.

Here is some advice for making sure your queries are quick and don't read too much data (which can increase costs):

1. Use shorter time ranges

You should almost always include a time range in your queries, and the shorter the better. There are a variety of SQL features to help you do this including now(), INTERVAL, and dateDiff. See more about these in our SQL docs.
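For instance, a small helper can make sure every generated query carries a bounded time range. This is only a sketch; the helper name and the aggregated columns are hypothetical:

```python
def top_events_in_last_days(days: int) -> str:
    """Build SQL that counts events per name over a bounded, recent time range."""
    if days <= 0:
        raise ValueError("days must be a positive integer")
    return (
        "SELECT event, count() AS event_count "
        "FROM events "
        f"WHERE timestamp >= now() - INTERVAL {int(days)} DAY "
        "GROUP BY event "
        "ORDER BY event_count DESC "
        "LIMIT 100"
    )
```

Calling top_events_in_last_days(7) yields a query restricted to the last seven days rather than the full history of the events table.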

2. Materialize a view for the data you need

The data warehouse enables you to save and materialize views of your data. This means that the view is precomputed, which can significantly improve query performance.

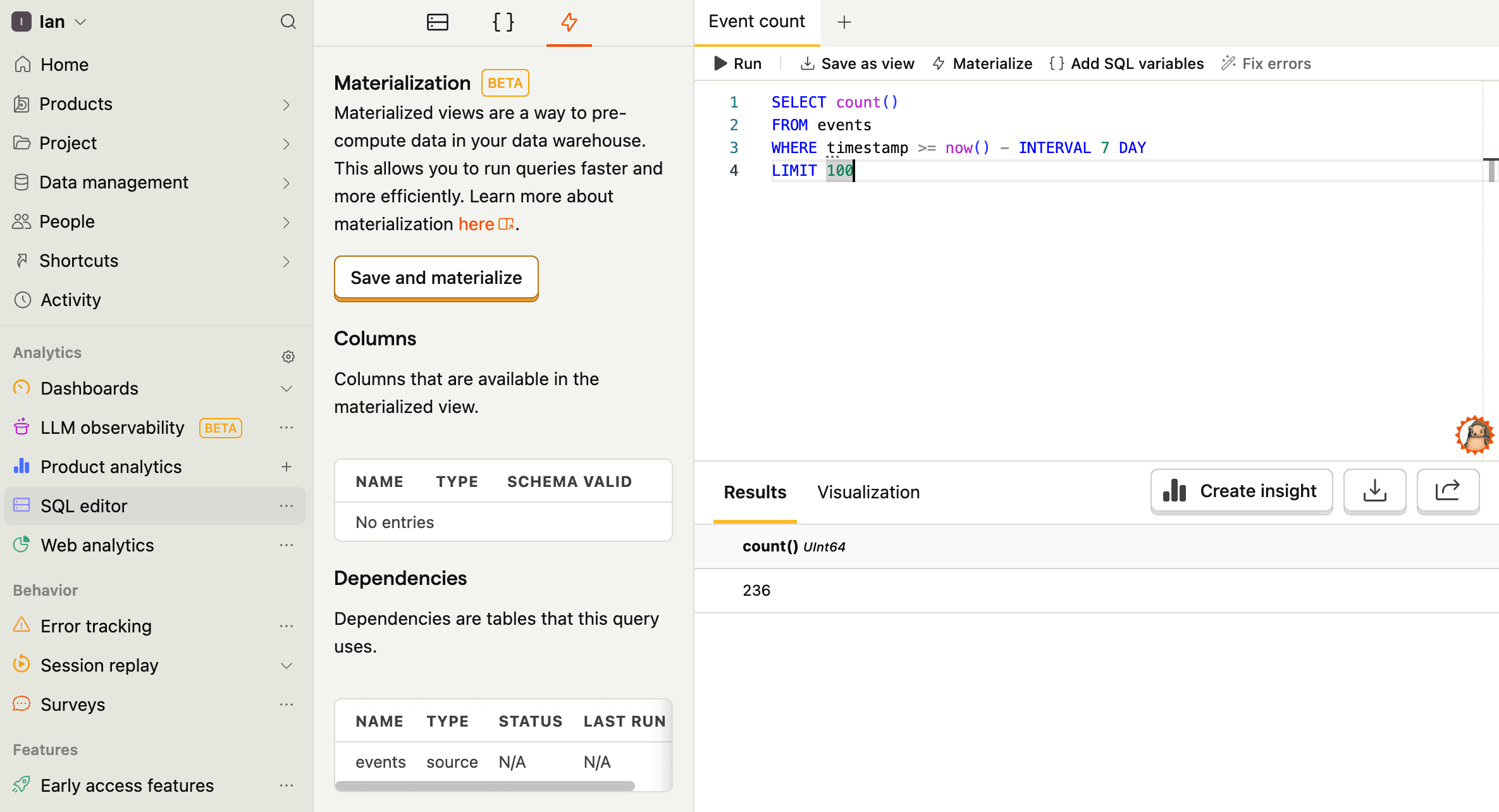

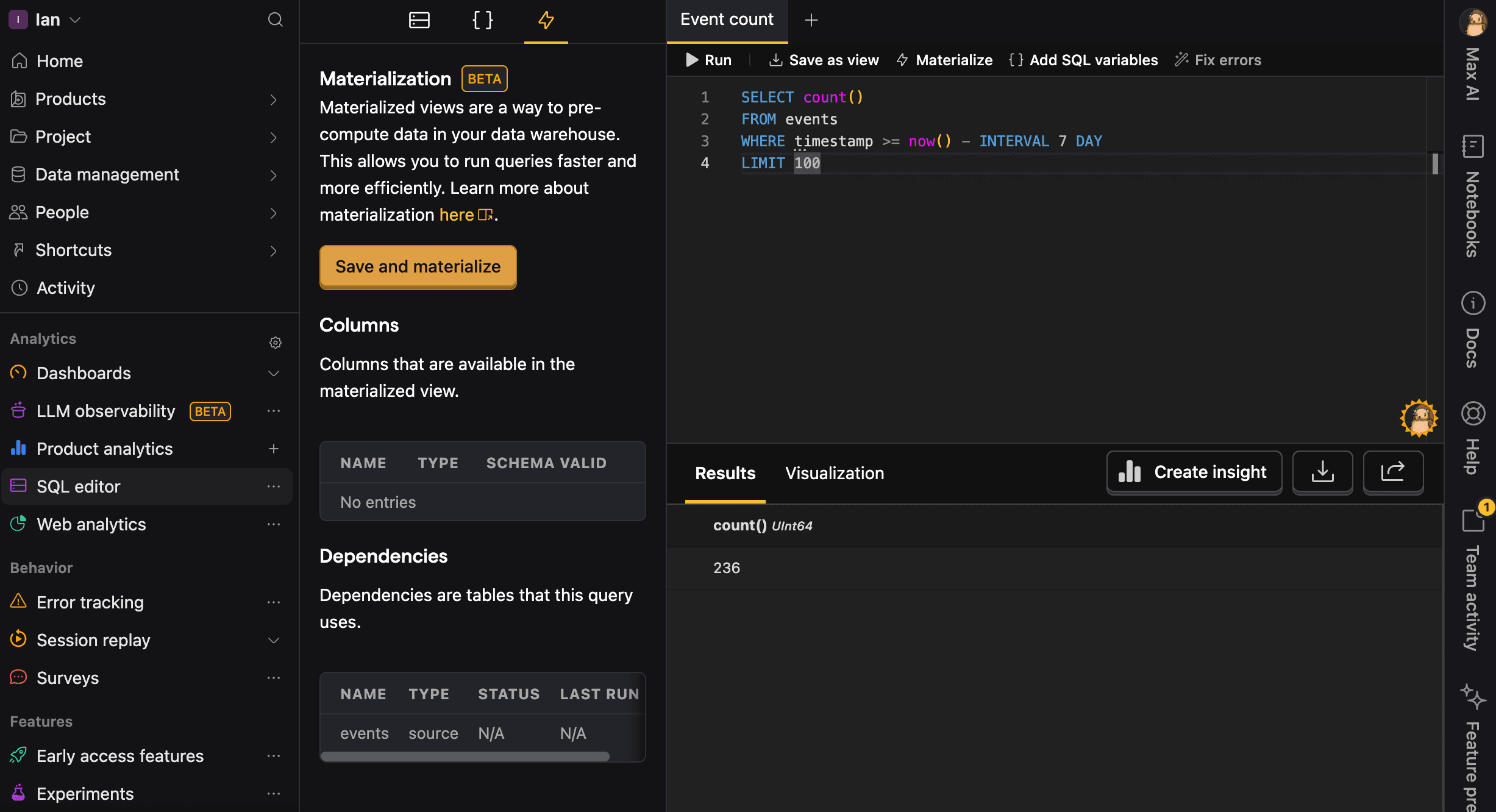

To do this, write your query in the SQL editor, click Materialize, then Save and materialize, and give it a name without spaces (I chose mat_event_count). You can also schedule the view to update at a specific interval.

Once done, you can query the view like any other table.

3. Don't scan the same table multiple times

Reading a large table like events or persons more than once in the same query multiplies the work PostHog has to do (more I/O, more CPU, more memory). For example, this query is inefficient:
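An illustrative sketch of this pattern (not the original example; the event names are assumptions) is a query that reads events once per metric:

```python
# Each branch of the UNION ALL scans the raw events table separately.
INEFFICIENT_QUERY = """
SELECT 'pageview' AS metric, count() AS total
FROM events
WHERE event = '$pageview' AND timestamp >= now() - INTERVAL 7 DAY
UNION ALL
SELECT 'autocapture' AS metric, count() AS total
FROM events
WHERE event = '$autocapture' AND timestamp >= now() - INTERVAL 7 DAY
"""

# Count how many times the raw table is read.
events_scans = INEFFICIENT_QUERY.count("FROM events")
```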

Instead, pull the rows you need once and save them as a materialized view. You can then query from that materialized view in all the other steps.

Start by saving this materialized view, e.g. as base_events:
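A sketch of what the SQL behind such a view might look like. The selected columns and event names are assumptions, and the view itself is saved through the SQL editor rather than through this code:

```python
# SQL to paste into the SQL editor and save as the materialized view base_events.
# It reads the raw events table exactly once.
BASE_EVENTS_VIEW_SQL = """
SELECT event, timestamp, distinct_id
FROM events
WHERE event IN ('$pageview', '$autocapture')
  AND timestamp >= now() - INTERVAL 7 DAY
"""
```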

You can then query from base_events in your main query, which avoids scanning the raw events table multiple times:
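For illustration, assuming base_events exposes an event column, a main query could look like this sketch:

```python
# Every step reads from the precomputed base_events view, never from raw events.
MAIN_QUERY = """
SELECT 'pageview' AS metric, count() AS total
FROM base_events
WHERE event = '$pageview'
UNION ALL
SELECT 'autocapture' AS metric, count() AS total
FROM base_events
WHERE event = '$autocapture'
"""

request_body = {
    "query": {"kind": "HogQLQuery", "query": MAIN_QUERY},
    "name": "event_totals_from_base_events",
}
```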

4. Name your queries for easier debugging

Always provide a meaningful name parameter for your queries. This helps you:

- Identify slow or problematic queries in the query_log table

- Analyze query performance patterns over time

- Debug issues more efficiently

- Track resource usage by query type

Good query names are descriptive and include the purpose:

- daily_active_users_last_7_days

- funnel_signup_to_activation

- revenue_by_country_monthly

Bad names are generic and vague:

- query1

- test

- data

5. Use timestamp-based pagination instead of OFFSET

When querying large datasets like events or query_log over multiple batches, avoid using OFFSET for pagination. Instead, use timestamp-based pagination, which is much more efficient and scales better.

❌ Inefficient approach using OFFSET:
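A sketch of the OFFSET pattern to avoid. The date range, columns, and page size are assumptions:

```python
def offset_page_query(page: int, page_size: int = 1000) -> str:
    """Build the SQL for one page; later pages must skip ever more rows."""
    return (
        "SELECT uuid, event, timestamp "
        "FROM events "
        "WHERE timestamp >= '2024-01-01' AND timestamp < '2024-01-02' "
        "ORDER BY timestamp "
        f"LIMIT {int(page_size)} OFFSET {int(page) * int(page_size)}"
    )
```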

✅ Efficient approach using timestamp pagination:
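A minimal sketch of timestamp-based pagination. It assumes timestamp is the first selected column, that timestamps can be compared against string literals in this format, and that you supply your own run_page callable (hypothetical) that executes the SQL and returns the result rows:

```python
from typing import Callable, Iterator, List


def build_page_query(cursor: str, end: str, page_size: int = 1000) -> str:
    """SQL for the next page: only rows strictly after the cursor timestamp."""
    return (
        "SELECT timestamp, uuid, event "
        "FROM events "
        f"WHERE timestamp > '{cursor}' AND timestamp < '{end}' "
        "ORDER BY timestamp ASC "
        f"LIMIT {int(page_size)}"
    )


def iter_pages(run_page: Callable[[str], List[list]], start: str) -> Iterator[List[list]]:
    """Yield pages until one comes back empty, advancing the cursor each time."""
    cursor = start
    while True:
        rows = run_page(cursor)
        if not rows:
            return
        yield rows
        # The last row's timestamp becomes the starting point of the next page.
        cursor = rows[-1][0]
```

In practice, run_page would call build_page_query(cursor, end), send it to the query endpoint, and return the results list. Because the cursor comparison is strict, rows that share the exact timestamp of a page's last row can be skipped, so you may want a tie-breaker column if that matters for your data.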

This approach is more efficient because:

- Constant performance: Each query executes in similar time regardless of how many rows you've already retrieved

- Index-friendly: Uses the timestamp index effectively for filtering

- Scalable: Performance doesn't degrade as you paginate through millions of rows

For geeks: OFFSET-based pagination gets progressively slower because the database must scan and skip all the offset rows for each query. With timestamp-based pagination, the database uses the timestamp index to directly jump to the right starting point, maintaining consistent performance across all pages.

6. Other SQL optimizations

Options 1-5 make the most difference, but other generic SQL optimizations work too. See our SQL docs for commands, useful functions, and more to help you with this.

Query parameters

Top level request parameters include:

- query (required): Specifies what data to retrieve. This must include a kind property that defines the query type.

- client_query_id (optional): A client-provided identifier for tracking the query.

- refresh (optional): Controls caching behavior and execution mode (sync vs async).

- filters_override (optional): Dashboard-specific filters to apply.

- variables_override (optional): Variable overrides for queries that support variables.

- name (optional): A descriptive name for the query to better identify it in the query_log table. We strongly recommend providing meaningful names for easier debugging and performance analysis.
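As a sketch, a request body combining several of these top-level parameters might be assembled as follows. The helper name is hypothetical, and the accepted refresh values are taken from the execution modes this page lists:

```python
import uuid

# Execution modes documented for the refresh parameter.
REFRESH_MODES = {
    "blocking", "async", "force_blocking", "force_async",
    "force_cache", "lazy_async", "async_except_on_cache_miss",
}


def build_request_body(sql: str, name: str, refresh: str = "blocking") -> dict:
    """Assemble the top-level request parameters around a HogQLQuery."""
    if refresh not in REFRESH_MODES:
        raise ValueError(f"unknown refresh mode: {refresh}")
    return {
        "query": {"kind": "HogQLQuery", "query": sql},
        "name": name,
        # A client-generated ID makes the query easier to track later.
        "client_query_id": str(uuid.uuid4()),
        "refresh": refresh,
    }
```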

Caching and execution modes

The refresh parameter controls the execution mode of the query. It can be one of the following values:

- blocking (default): Executes synchronously unless fresh results exist in cache

- async: Executes asynchronously unless fresh results exist in cache

- force_blocking: Always executes synchronously

- force_async: Always executes asynchronously

- force_cache: Only returns cached results (never calculates)

- lazy_async: Use extended cache period before asynchronous calculation

- async_except_on_cache_miss: Use cache but execute synchronously on cache miss

Tip: To cancel a running query, send a DELETE request to the /api/projects/:project_id/query/:query_id/ endpoint.
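A sketch of building that cancel request with Python's standard library. The host and the Bearer authorization header are assumptions, and the function name is hypothetical:

```python
import urllib.request


def build_cancel_request(host: str, project_id: str, query_id: str, api_key: str) -> urllib.request.Request:
    """Prepare (but do not send) the DELETE request that cancels a running query."""
    return urllib.request.Request(
        f"{host}/api/projects/{project_id}/query/{query_id}/",
        headers={"Authorization": f"Bearer {api_key}"},  # assumed auth scheme
        method="DELETE",
    )


# Usage (performs a real HTTP call, so it is left commented out):
# urllib.request.urlopen(build_cancel_request("https://us.posthog.com", "12345", "my-query-id", "phx_..."))
```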

Query types

The kind property in the query parameter can be one of the following values.

- HogQLQuery: Queries using PostHog's version of SQL

- EventsQuery: Raw event data retrieval

- TrendsQuery: Time-series trend analysis

- FunnelsQuery: Conversion funnel analysis

- RetentionQuery: User retention analysis

- PathsQuery: User journey path analysis

Beyond HogQLQuery, these are mostly used to power PostHog internally and are not useful for you, but you can see the frontend query schema for a complete list and more details.

Response structure

The response format depends on the query type, but all responses include:

- results: The data returned by the query

- is_cached (for cached responses): Indicates the result came from cache

- timings (when available): Performance metrics for the query execution

Cached responses

API queries are cached by default. You can check if a response is cached by checking the is_cached property. Responses also contain cache-related details like:

- cache_key: A unique identifier for the cached result

- cache_target_age: The timestamp until which the cached result is considered valid

- last_refresh: When the data was last computed

- next_allowed_client_refresh: The earliest time when a client can request a fresh calculation

Asynchronous queries

For asynchronous queries (like ones with refresh: async), the initial response includes a query status with its completion status, query ID, start time, and more.

You can then poll the status by sending a GET request to the /api/projects/:project_id/query/:query_id/ endpoint.
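A hedged sketch of a polling loop. It assumes the status payload is nested under a query_status key with a boolean complete field, which you should verify against a real response. The fetch_status callable is hypothetical and stands in for your own GET request to the endpoint above:

```python
import time
from typing import Callable


def wait_for_query(
    fetch_status: Callable[[str], dict],
    query_id: str,
    interval_s: float = 1.0,
    timeout_s: float = 60.0,
) -> dict:
    """Poll until the query reports completion or the timeout elapses."""
    deadline = time.monotonic() + timeout_s
    while True:
        payload = fetch_status(query_id)
        # Assumed shape: {"query_status": {"id": ..., "complete": bool, ...}}
        status = payload.get("query_status", payload)
        if status.get("complete"):
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"query {query_id} did not complete in {timeout_s}s")
        time.sleep(interval_s)
```

Keep the polling interval modest, since each status check is itself a request to the API.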

Rate limits

API queries are limited at the project level to:

- 2400 requests per hour

- 240 requests per minute

- 3 queries running concurrently

- 60 threads per query

- 10 seconds of max execution time (this applies to query execution time, not HTTP request duration)

At this time, we are not offering higher limits than these, but you may wish to try our endpoints product, which offers query customization and higher limits. Alternatively, you may be able to use our batch exports product to pull the data that you need from our events or persons tables on a faster cadence.

If the project's concurrency quota is exhausted, we put the query in a queue. The query may wait up to 30 seconds in the queue before executing, being canceled, or timing out.
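To stay within the request limits on the client side, one simple option is to space requests evenly. The arithmetic below is only a sketch based on the limits listed above; the function name is hypothetical:

```python
REQUESTS_PER_HOUR = 2400
REQUESTS_PER_MINUTE = 240


def min_interval_seconds(sustained: bool = True) -> float:
    """Smallest even spacing between requests that stays within a limit.

    sustained=True uses the hourly limit (safe to run indefinitely);
    sustained=False uses the per-minute limit (short bursts only).
    """
    if sustained:
        return 3600 / REQUESTS_PER_HOUR
    return 60 / REQUESTS_PER_MINUTE
```

With these limits, a client that runs continuously should wait about 1.5 seconds between requests, while short bursts can go as fast as one request every 0.25 seconds until the hourly budget is used up.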