How to monitor LlamaIndex apps with Langfuse and PostHog


LlamaIndex is a framework for connecting LLMs to external data sources. By combining PostHog with Langfuse, an open source LLM analytics platform, you can trace each step of your LLM app and analyze that data alongside your product analytics.

In this tutorial, we show you how to set this up by walking you through a simple retrieval-augmented generation (RAG) chat app.

1. Set up the sample app

For this tutorial, we create an app that answers questions about how to care for hedgehogs. It vectorizes a PDF guide on hedgehog care and uses Mistral's 8x22B model to answer questions based on that guide.

To do this, install all the necessary dependencies for the app:
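The install command itself isn't reproduced here. Assuming the app needs the packages llama-index, llama-index-llms-mistralai, llama-index-embeddings-mistralai and langfuse (our guess based on the imports the app uses, not a confirmed list), this small script reports which of the corresponding modules Python can find, so you can confirm the install worked before going further.

```python
import importlib.util

# Modules the app imports. The matching pip packages are an assumption:
# llama-index, llama-index-llms-mistralai, llama-index-embeddings-mistralai, langfuse
REQUIRED_MODULES = [
    "llama_index.core",
    "llama_index.llms.mistralai",
    "llama_index.embeddings.mistralai",
    "langfuse",
]


def check_modules(names):
    """Map each dotted module name to True if Python can find it."""
    status = {}
    for name in names:
        try:
            status[name] = importlib.util.find_spec(name) is not None
        except ModuleNotFoundError:
            # find_spec imports parent packages first and raises if one is missing
            status[name] = False
    return status


if __name__ == "__main__":
    for name, found in check_modules(REQUIRED_MODULES).items():
        print(("ok      " if found else "MISSING ") + name)
```

Any module marked MISSING points to a package that still needs installing.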

Next, create a folder for the project as well as a Python file called app.py:
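If you'd rather script this step, here is a minimal Python sketch using pathlib. The folder name hedgehog-app is a hypothetical choice; any name works.

```python
from pathlib import Path


def create_project(root=".", name="hedgehog-app"):
    """Create <root>/<name>/app.py if it's missing and return its path."""
    project_dir = Path(root) / name
    project_dir.mkdir(parents=True, exist_ok=True)
    app_file = project_dir / "app.py"
    app_file.touch(exist_ok=True)  # never truncates an existing app.py
    return app_file
```

Calling create_project() from the directory where you want the project creates hedgehog-app/app.py and returns its path. Running it again is safe because touch() only updates the timestamp of an existing file.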

Then, paste the following code into app.py to set up the app:

Replace <your_mistral_api_key> with your actual key. To get one, sign up for a Mistral account and subscribe to a free trial or billing plan, after which you’ll be able to generate an API key.
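Here is one possible sketch of that setup code, assuming the llama-index-llms-mistralai and llama-index-embeddings-mistralai integrations and the Mistral API model names open-mixtral-8x22b and mistral-embed (check Mistral's current model list). It reads the key from a hypothetical MISTRAL_API_KEY environment variable, so the key doesn't have to live in app.py, and falls back to the placeholder otherwise. The LLM answers questions, while the separate embedding model is what actually vectorizes the PDF.

```python
import os


def get_mistral_api_key():
    """Prefer the MISTRAL_API_KEY environment variable over a hard-coded key."""
    key = os.environ.get("MISTRAL_API_KEY", "<your_mistral_api_key>")
    if key.startswith("<"):
        raise RuntimeError("Set MISTRAL_API_KEY or replace <your_mistral_api_key>.")
    return key


def configure_models(api_key):
    """Point LlamaIndex's global Settings at Mistral models."""
    # Imported inside the function so the key check above can run even
    # before the Mistral integrations are installed.
    from llama_index.core import Settings
    from llama_index.embeddings.mistralai import MistralAIEmbedding
    from llama_index.llms.mistralai import MistralAI

    # Model names are assumptions; the chat model answers, the embed model vectorizes.
    Settings.llm = MistralAI(model="open-mixtral-8x22b", temperature=0.1, api_key=api_key)
    Settings.embed_model = MistralAIEmbedding(model_name="mistral-embed", api_key=api_key)
```

In app.py you would then call configure_models(get_mistral_api_key()) once, before building any index. Settings is LlamaIndex's global configuration, so every index and query engine created afterwards uses these two models.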

2. Set up monitoring with Langfuse

Next, we set up Langfuse to trace our model generations. Sign up for a free Langfuse account if you haven't already. Then create a new project and copy the API keys.

Paste the keys at the top of app.py and instantiate the Langfuse client:
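A sketch of this step, assuming the Langfuse Python SDK's Langfuse() client picks up the LANGFUSE_PUBLIC_KEY, LANGFUSE_SECRET_KEY and LANGFUSE_HOST environment variables. The placeholder values and the helper names export_langfuse_settings and create_langfuse_client are hypothetical; the host shown is Langfuse's EU cloud.

```python
import os

# Placeholder values: copy the real keys from your Langfuse project settings.
LANGFUSE_SETTINGS = {
    "LANGFUSE_PUBLIC_KEY": "<your_langfuse_public_key>",
    "LANGFUSE_SECRET_KEY": "<your_langfuse_secret_key>",
    # Langfuse's EU cloud; US-region projects use https://us.cloud.langfuse.com
    "LANGFUSE_HOST": "https://cloud.langfuse.com",
}


def export_langfuse_settings(settings=LANGFUSE_SETTINGS):
    """Copy settings into os.environ, keeping any value that is already set."""
    for name, value in settings.items():
        os.environ.setdefault(name, value)


def create_langfuse_client():
    """Export the settings, then build a client that reads the LANGFUSE_* variables."""
    from langfuse import Langfuse  # imported lazily so the helpers above work without it

    export_langfuse_settings()
    return Langfuse()
```

Call langfuse = create_langfuse_client() near the top of app.py. Because setdefault never overwrites, keys already exported in your shell take precedence over the placeholders, which lets you keep real secrets out of the file.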

Then we register Langfuse's LlamaIndexCallbackHandler in LlamaIndex's Settings.callback_manager just below the existing code where we import LlamaIndex.
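A minimal sketch of the registration, assuming the Langfuse v2 Python SDK, which provides LlamaIndexCallbackHandler in langfuse.llama_index (later SDK versions integrate with LlamaIndex differently). The atexit hook is our addition, not part of the original step: the handler uploads traces in the background, so a short script like app.py can exit before they are sent unless the handler is flushed.

```python
import atexit


def register_langfuse_handler():
    """Send LlamaIndex events to Langfuse (assumes the Langfuse v2 Python SDK)."""
    from langfuse.llama_index import LlamaIndexCallbackHandler
    from llama_index.core import Settings
    from llama_index.core.callbacks import CallbackManager

    handler = LlamaIndexCallbackHandler()  # reads the same LANGFUSE_* variables
    Settings.callback_manager = CallbackManager([handler])
    # Traces are uploaded in the background; flush on interpreter exit so a
    # short-lived script doesn't quit before they reach Langfuse.
    atexit.register(handler.flush)
    return handler
```

Note that assigning a new CallbackManager replaces any handlers configured earlier; pass them all in the same list if you use more than one.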

3. Build RAG on the hedgehog PDF

First, download the hedgehog care guide PDF by running the following command in your project directory:
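If you prefer to stay in Python, urllib from the standard library can do the download. The guide's URL isn't reproduced here, so PDF_URL is a placeholder you need to replace. The check for the %PDF- header is an extra safeguard we've added: it catches the case where the server returns an HTML error page instead of the PDF.

```python
import urllib.request
from pathlib import Path

# Placeholder: substitute the hedgehog care guide's real link.
PDF_URL = "<hedgehog_care_guide_pdf_url>"


def download_pdf(url, dest="hedgehog.pdf"):
    """Download url to dest and verify the result starts with a PDF header."""
    path, _headers = urllib.request.urlretrieve(url, dest)
    path = Path(path)
    with path.open("rb") as f:
        is_pdf = f.read(5) == b"%PDF-"
    if not is_pdf:
        path.unlink()  # don't leave an HTML error page where the PDF should be
        raise ValueError(f"{url} did not return a PDF")
    return path
```

Run download_pdf(PDF_URL) once you've filled in the real link. By default the file is saved as hedgehog.pdf in the current directory; that file name is our assumption.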

Next, we load the PDF using LlamaIndex's SimpleDirectoryReader, create vector embeddings with VectorStoreIndex, and then turn the index into a query engine that we can retrieve information from.
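One way to write this step, as a sketch using LlamaIndex's core API. The persist_dir caching is an optional addition of ours, not part of the original tutorial: it saves the embeddings to disk so later runs skip re-embedding the PDF (and the Mistral API calls that go with it). The default top_k=5 is also an assumption; LlamaIndex retrieves 2 chunks per query unless told otherwise.

```python
import os


def build_query_engine(pdf_path="./hedgehog.pdf", persist_dir="./storage", top_k=5):
    """Load or build a vector index over the PDF and return a query engine."""
    from llama_index.core import (
        SimpleDirectoryReader,
        StorageContext,
        VectorStoreIndex,
        load_index_from_storage,
    )

    if os.path.isdir(persist_dir):
        # Reuse embeddings saved by an earlier run instead of re-embedding the PDF.
        storage = StorageContext.from_defaults(persist_dir=persist_dir)
        index = load_index_from_storage(storage)
    else:
        # Parse the PDF into Documents, embed the chunks with Settings.embed_model,
        # and keep the vectors in LlamaIndex's default in-memory vector store.
        docs = SimpleDirectoryReader(input_files=[pdf_path]).load_data()
        index = VectorStoreIndex.from_documents(docs)
        index.storage_context.persist(persist_dir=persist_dir)

    # Each query retrieves the top_k most similar chunks and sends them to Settings.llm.
    return index.as_query_engine(similarity_top_k=top_k)
```

If you change the PDF, delete the storage folder so the index is rebuilt. A reloaded index still embeds each query with Settings.embed_model, so configure the same embedding model before calling build_query_engine.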

Finally, we can add code to query the engine and print the answer:
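The query itself is a single call. This sketch wraps it in a hypothetical helper, ask, that returns the answer as a string, which also makes the surrounding code easy to test without calling Mistral.

```python
def ask(query_engine, question):
    """Send one question through the RAG pipeline and return the answer text."""
    response = query_engine.query(question)
    # str() of a LlamaIndex Response object is the generated answer text
    return str(response)
```

Assuming the engine from the previous step is stored in a variable called query_engine, print(ask(query_engine, "How often should I feed a hedgehog?")) prints the answer; the question is only an example.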

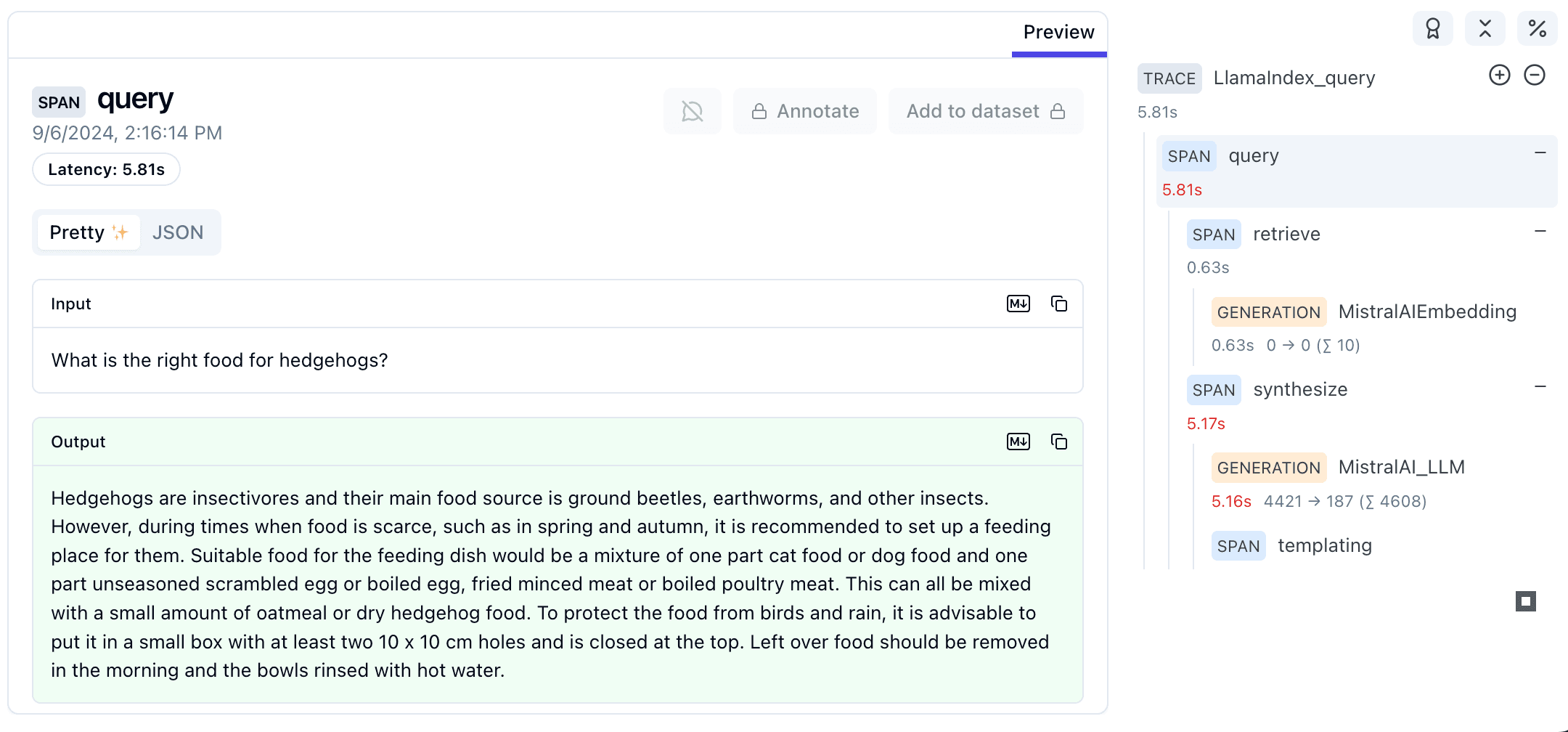

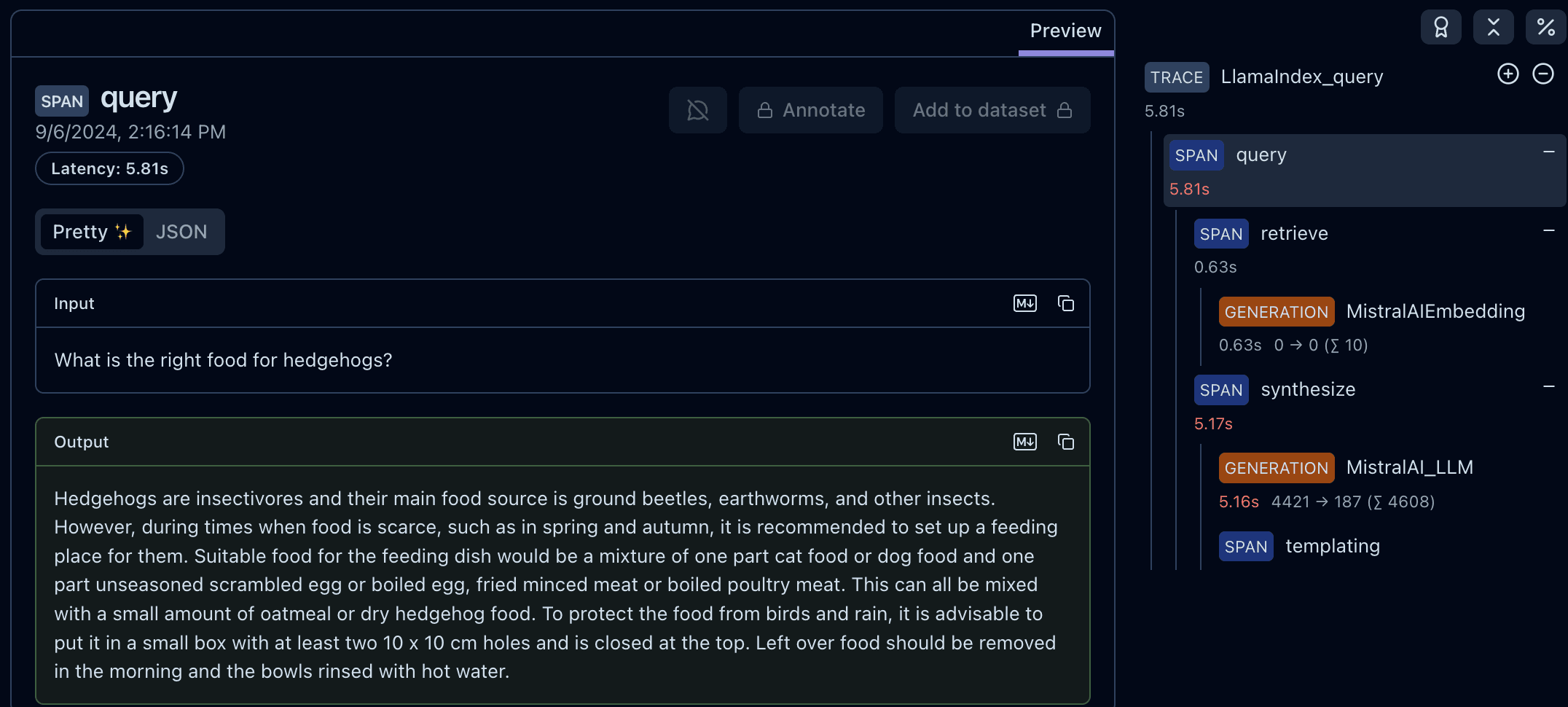

Test the app by running python app.py. You should see the model's answer to your question printed in your terminal 🎉

All steps of the LLM chain are now tracked in Langfuse, and you can view them in your Langfuse dashboard.

4. Integrate Langfuse with PostHog

Next, we connect Langfuse to PostHog so that you can combine your LLM trace data with your PostHog analytics. This lets you answer product questions such as:

- What are my LLM costs by customer, model, and in total?

- How many of my users are interacting with my LLM features?

- Does interacting with LLM features correlate with other metrics (retention, usage, revenue, etc.)?

Here's how to connect Langfuse to PostHog:

- In your Langfuse dashboard, click on your project settings and then on Integrations.

- On the PostHog integration, click Configure and paste in your PostHog host and project token (you can find these in your PostHog project settings).

- Click Enabled and then Save.

Langfuse will now begin sending your LLM analytics to PostHog once a day.

5. Set up a PostHog dashboard

The last step is to set up a PostHog dashboard so that you can view your LLM insights. We've made this easy by creating a dashboard template. To use it:

- Go to the dashboard tab in PostHog.

- Click the New dashboard button in the top right.

- Select LLM metrics – Langfuse from the list of templates.
