How to do A/A testing

An A/A test is set up exactly like an A/B test, except that both groups receive the same code or components. Because both groups get identical functionality, the goal is to see no statistically significant difference between the variants by the end of the experiment.
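The small simulation below shows why identical treatment should produce matching results. It is a sketch, not anything PostHog runs. Simulated users are split 50/50 at random, and both groups convert with the same true probability. The 10% conversion rate, user count, and seed are arbitrary illustrative values.

```javascript
// Small seeded PRNG (mulberry32) so the simulation is reproducible.
function mulberry32(seed) {
  let state = seed >>> 0;
  return function () {
    state = (state + 0x6d2b79f5) | 0;
    let t = state;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296; // uniform in [0, 1)
  };
}

// Simulates an A/A test: users are split 50/50 at random, and BOTH groups
// convert with the same true probability because they see identical code.
function simulateAATest(userCount, trueConversionRate, seed) {
  const random = mulberry32(seed);
  const groups = {
    control: { users: 0, conversions: 0 },
    test: { users: 0, conversions: 0 },
  };
  for (let i = 0; i < userCount; i++) {
    const group = random() < 0.5 ? groups.control : groups.test;
    group.users += 1;
    if (random() < trueConversionRate) group.conversions += 1;
  }
  return {
    control: groups.control.conversions / groups.control.users,
    test: groups.test.conversions / groups.test.users,
  };
}

console.log(simulateAATest(20_000, 0.1, 42));
```

Each run prints two observed conversion rates that are close but usually not identical. That remaining gap is random noise. The statistical analysis of an experiment exists to tell noise like this apart from a real difference.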

Teams run A/A tests to check that their A/B testing service, functionality, and implementation work as expected and provide accurate results. A/A tests help uncover broken parts of this process, and this tutorial shows you how to run one in PostHog.

Unlike with some other A/B testing tools, you don't need an A/A test in PostHog to calculate baseline conversion and sample size metrics. These come from your product data and are calculated automatically when you create an experiment.

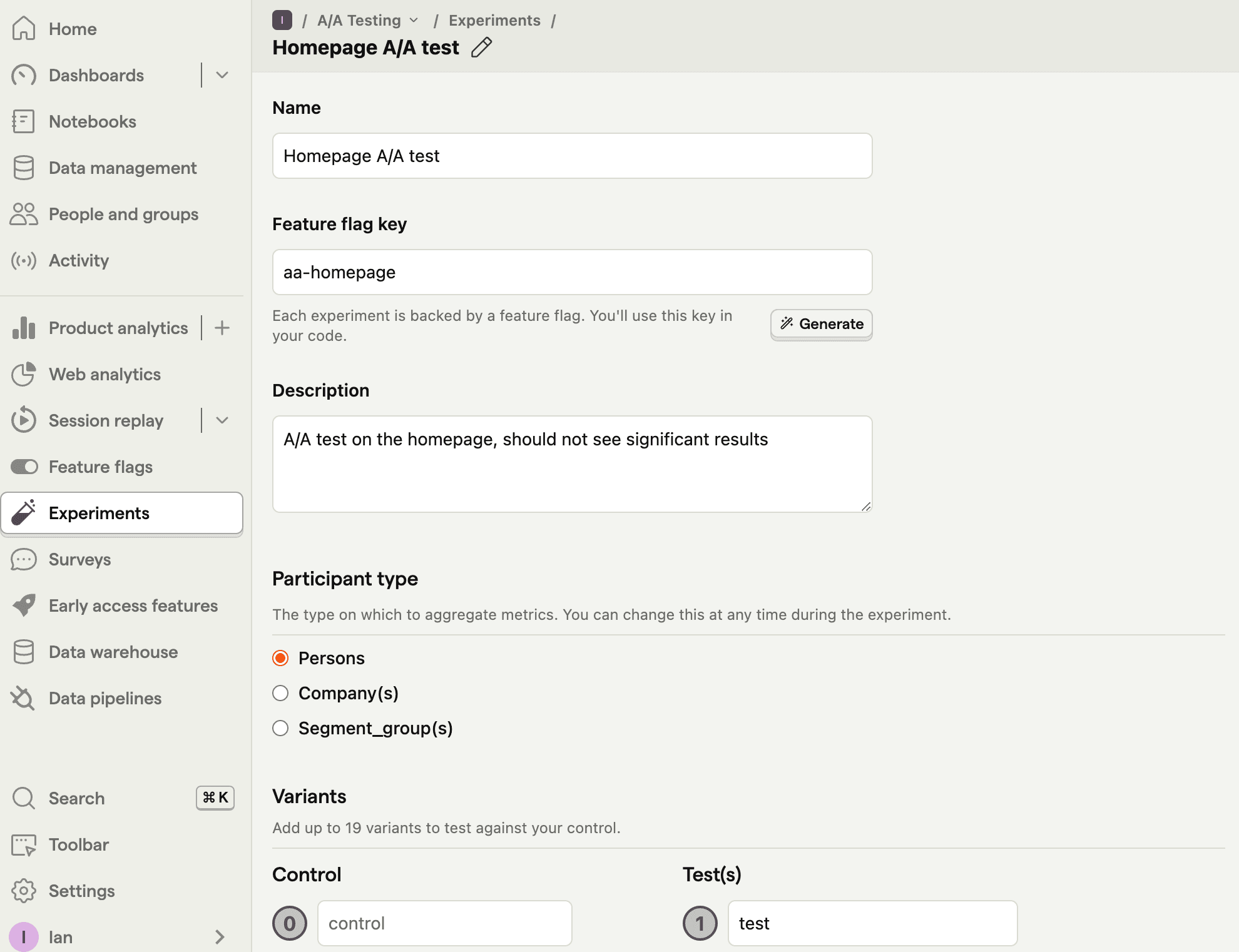

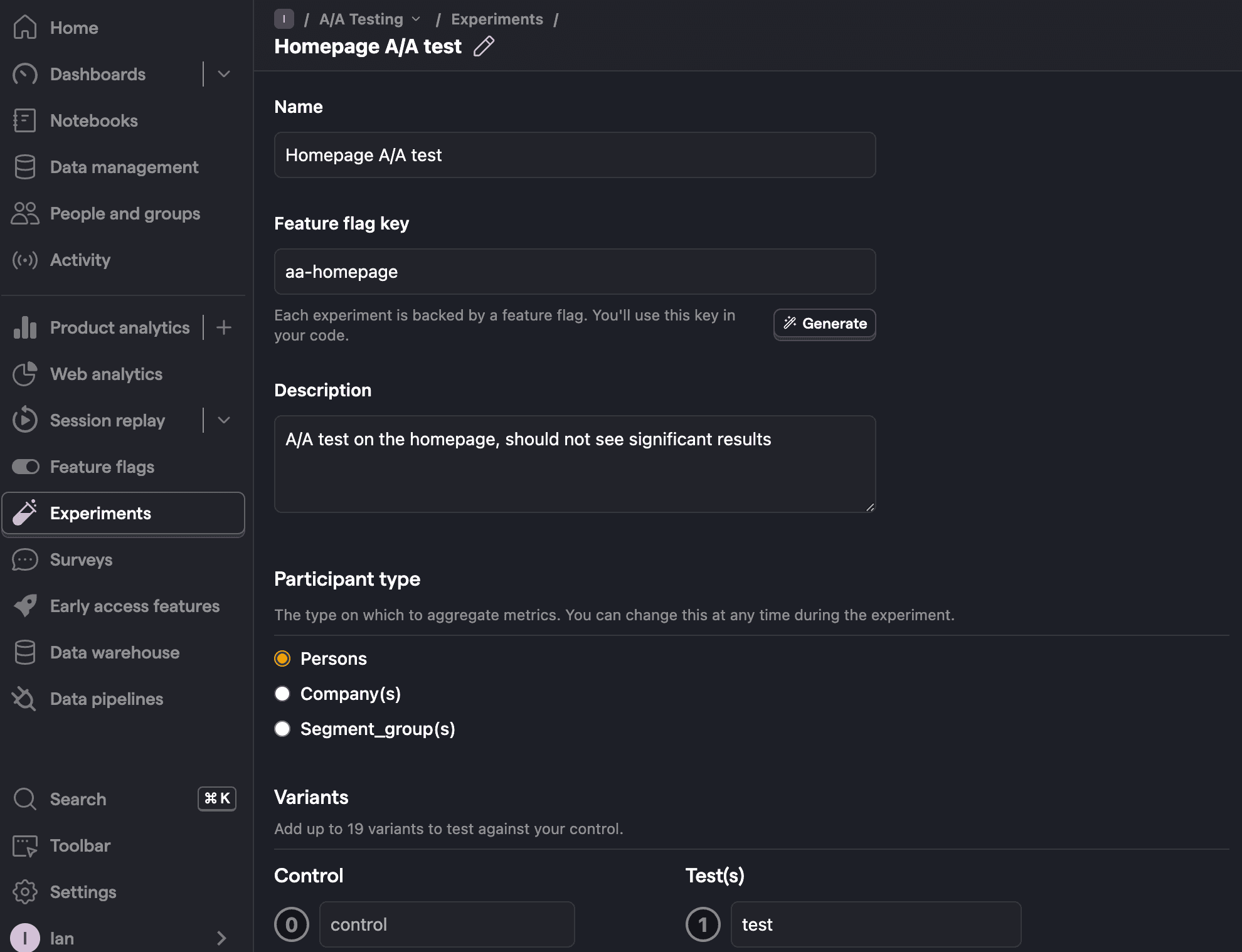
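PostHog computes the baseline and sample size numbers for you, but a rough sketch helps show what goes into them. The function below uses a common textbook normal-approximation formula for comparing two conversion rates at 5% significance and 80% power. This formula is an assumption for illustration and is not necessarily the one PostHog uses. `sampleSizePerVariant` is a hypothetical name, and the counts in the usage line are made-up example values.

```javascript
// Standard normal quantiles for a two-sided 5% significance level and 80% power.
const Z_ALPHA_HALF = 1.96;
const Z_BETA = 0.8416;

// Users needed per variant to detect a change in conversion rate from
// `baseline` to `baseline + minDetectableEffect` (normal approximation).
function sampleSizePerVariant(baseline, minDetectableEffect) {
  const p1 = baseline;
  const p2 = baseline + minDetectableEffect;
  const variance = p1 * (1 - p1) + p2 * (1 - p2);
  return Math.ceil(((Z_ALPHA_HALF + Z_BETA) ** 2 * variance) / minDetectableEffect ** 2);
}

// Baseline from your own data: users who converted / users who entered the funnel.
const baseline = 1200 / 12000; // made-up example counts, i.e. a 10% baseline
console.log(sampleSizePerVariant(baseline, 0.02));
```

For a 10% baseline and a 2 percentage point minimum detectable effect, this works out to 3,839 users per variant. Halving the effect to 1 point roughly quadruples the required sample.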

Creating an experiment

The first step in running an A/A test is creating an experiment. You do this by going to the experiments tab and clicking the "New experiment" button. Set a name and flag key. Make it clear in the name or description that this is an A/A test.

Once created, set a primary metric. If you use a trend, like total pageviews, make sure to use an equal variant split (which is the default). If you use a funnel, you can test non-equal variant splits like 25-75. To do this, edit the feature flag with the same key as your experiment, change the variant rollout, and press save.
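Variant splits like 25-75 only work if each user lands in the same variant every time the flag is evaluated. A common way flag services achieve this is to hash the flag key together with the user's ID and map the hash onto the rollout percentages. The sketch below shows that idea using Node's built-in `crypto` module. `assignVariant` and the variant-object shape are hypothetical, and this is not PostHog's exact bucketing algorithm.

```javascript
const { createHash } = require("node:crypto");

// Maps a (flag, user) pair to a stable number in [0, 1).
function bucket(flagKey, distinctId) {
  const hex = createHash("sha1").update(`${flagKey}.${distinctId}`).digest("hex");
  // 13 hex characters = 52 bits, which a JavaScript number represents exactly.
  return parseInt(hex.slice(0, 13), 16) / 2 ** 52;
}

// Hypothetical variant shape: [{ key, rolloutPercentage }], percentages summing to 100.
function assignVariant(flagKey, distinctId, variants) {
  const point = bucket(flagKey, distinctId) * 100;
  let cumulative = 0;
  for (const { key, rolloutPercentage } of variants) {
    cumulative += rolloutPercentage;
    if (point < cumulative) return key;
  }
  // Only reachable if the percentages add up to less than 100.
  throw new Error("variant rollout percentages must add up to 100");
}

const SPLIT_25_75 = [
  { key: "control", rolloutPercentage: 25 },
  { key: "test", rolloutPercentage: 75 },
];

let controlCount = 0;
for (let i = 0; i < 10_000; i++) {
  if (assignVariant("aa-homepage", `user-${i}`, SPLIT_25_75) === "control") controlCount++;
}
console.log(`control share: ${controlCount / 10_000}`);
```

The bucket depends only on the flag key and the user's ID. As a result, re-evaluating the flag never moves a user between variants, and different flags produce unrelated splits.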

Implementing your A/A test

It is critical to treat an A/A test like a standard A/B test and implement it the same way. You must call the related feature flag, set up code that depends on it, and capture the relevant goal event(s).
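Below is a minimal browser-side sketch, assuming posthog-js is already initialized and passed in as `posthog`. `aa-homepage` matches the flag key used in the override example in this tutorial. The goal event name `homepage_signup_clicked`, the headline text, and the helper functions are hypothetical placeholders.

```javascript
// Hypothetical flag key and goal event name; use the ones from your own experiment.
const FLAG_KEY = "aa-homepage";
const GOAL_EVENT = "homepage_signup_clicked";

// Branch on the flag exactly as you would in an A/B test. In an A/A test the
// branches deliberately render the same thing, so any measured difference
// points at the experiment setup rather than the feature.
function renderHeadline(posthog) {
  // In posthog-js, getFeatureFlag also sends a $feature_flag_called event by default.
  const variant = posthog.getFeatureFlag(FLAG_KEY);
  if (variant === "test") {
    return "Ship faster with fewer bugs";
  }
  return "Ship faster with fewer bugs";
}

// Evaluate the flag only once flags have loaded, so users are not shown
// the fallback branch before their variant is known.
function mountHomepage(posthog, setHeadline) {
  posthog.onFeatureFlags(() => setHeadline(renderHeadline(posthog)));
}

// Capture the goal event that the experiment's primary metric is based on.
function onSignupClick(posthog) {
  posthog.capture(GOAL_EVENT, { location: "homepage" });
}
```

In an app, you would call `mountHomepage` with the `posthog` instance from posthog-js and wire `onSignupClick` to the button's click handler.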

After implementing, go back to your experiment and click "launch." Let the experiment run for a few days or weeks and monitor its metrics.

Handling A/A test results

After running your test, you should see similar results for all the variants. The results should not be statistically significant, since all variants received the same treatment.
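To make "not statistically significant" concrete, here is a classic frequentist two-proportion z-test on conversion counts per variant. This is a sketch for intuition and is not necessarily the statistical method PostHog's experiment results use. `twoProportionZTest` is a hypothetical helper, and the counts in the usage line are illustrative, not real results.

```javascript
// Abramowitz & Stegun 7.1.26 approximation of erf (absolute error < 1.5e-7).
function erf(x) {
  const sign = x < 0 ? -1 : 1;
  const ax = Math.abs(x);
  const t = 1 / (1 + 0.3275911 * ax);
  const poly =
    ((((1.061405429 * t - 1.453152027) * t + 1.421413741) * t - 0.284496736) * t + 0.254829592) * t;
  return sign * (1 - poly * Math.exp(-ax * ax));
}

// Standard normal cumulative distribution function.
function normalCdf(z) {
  return 0.5 * (1 + erf(z / Math.SQRT2));
}

// Compares conversion rates of two variants using a pooled standard error.
function twoProportionZTest(conversionsA, usersA, conversionsB, usersB) {
  const pA = conversionsA / usersA;
  const pB = conversionsB / usersB;
  const pooled = (conversionsA + conversionsB) / (usersA + usersB);
  const standardError = Math.sqrt(pooled * (1 - pooled) * (1 / usersA + 1 / usersB));
  const z = (pB - pA) / standardError;
  const pValue = 2 * (1 - normalCdf(Math.abs(z))); // two-sided
  return { pA, pB, z, pValue };
}

console.log(twoProportionZTest(500, 5000, 510, 5000));
```

With these illustrative counts (10.0% vs. 10.2%), the p-value is far above 0.05, which is what a healthy A/A test looks like.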

If the results are statistically significant, something is wrong. Here are some areas to check:

- Feature flag calls: for the specific flag, create a trend insight of unique users for `$feature_flag_called` events and make sure they are split between the variants according to your rollout percentages (equally, for the default split). If not, there might be a problem with how you are evaluating the feature flag.

- Watch session replays: filter session replays for the `$feature_flag_called` event with your flag, or for events where your experiment feature flag is active, and look for differences between the variants. See: "How to use filters + session replays to understand user friction."

- Flag implementation: use overrides (like `posthog.featureFlags.overrideFeatureFlags({ flags: {'aa-homepage': 'test'} })`) for each of the variants and check that the same code runs. Try accessing the code in different states (logged in vs. logged out), browsers, and parameters.

- Consistently identify, set properties, and group users: if your experiment or goals depend on a user, property, or group filter, check that you are setting these values correctly before calling the flag. For example, you might not be setting a person property that a flag relies on before the flag is evaluated.
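The first check can be made more concrete with a sample ratio mismatch (SRM) test. This is a chi-square goodness-of-fit test that compares the unique users per variant against the split you configured. It is a sketch, not a PostHog feature. `checkSampleRatio` is a hypothetical helper, and the counts are whatever you read off your `$feature_flag_called` trend. The strict p < 0.001 threshold is a common choice for SRM checks that you may want to adjust.

```javascript
// Chi-square critical values at p = 0.001 for 1-4 degrees of freedom (standard table values).
const CRITICAL_P_0_001 = { 1: 10.828, 2: 13.816, 3: 16.266, 4: 18.467 };

// observed: unique users per variant, e.g. { control: 5000, test: 5050 }.
// expectedShares: the configured rollout, e.g. { control: 0.5, test: 0.5 }.
function checkSampleRatio(observed, expectedShares) {
  const keys = Object.keys(expectedShares);
  const total = keys.reduce((sum, key) => sum + (observed[key] ?? 0), 0);
  let chiSquare = 0;
  for (const key of keys) {
    const expected = total * expectedShares[key];
    const diff = (observed[key] ?? 0) - expected;
    chiSquare += (diff * diff) / expected;
  }
  const degreesOfFreedom = keys.length - 1;
  const critical = CRITICAL_P_0_001[degreesOfFreedom];
  if (critical === undefined) {
    throw new Error(`no critical value stored for ${degreesOfFreedom} degrees of freedom`);
  }
  return { chiSquare, mismatch: chiSquare > critical };
}

console.log(checkSampleRatio({ control: 5000, test: 5400 }, { control: 0.5, test: 0.5 }));
```

A `mismatch: true` result means the observed split is very unlikely under the rollout you configured. That usually points at flag evaluation or identification problems rather than at the feature itself.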

If none of these work, raise a support ticket in-app.

A successful A/A test provides evidence that your experimentation process and service work correctly, which gives you confidence in your future A/B test results. An "unsuccessful" A/A test uncovers important issues with your experimentation process that you can then fix.

Further reading

- Testing frontend feature flags with React, Jest, and PostHog

- How to run experiments without feature flags

- 8 annoying A/B testing mistakes every engineer should know
