New experimentation engine

We've built a new experimentation engine to support more advanced features and improve the accuracy of experiment results. Here are some of the key features it includes:

- A single, consistent exposure criterion for the entire experiment

- Support for outlier handling (Winsorization)

- Configurable handling of users exposed to multiple variants (exclude or use first seen variant)

- Improved support for conversion windows and time-based metrics (formerly called trend metrics)

- A better running time calculator that uses your historical data to estimate experiment duration

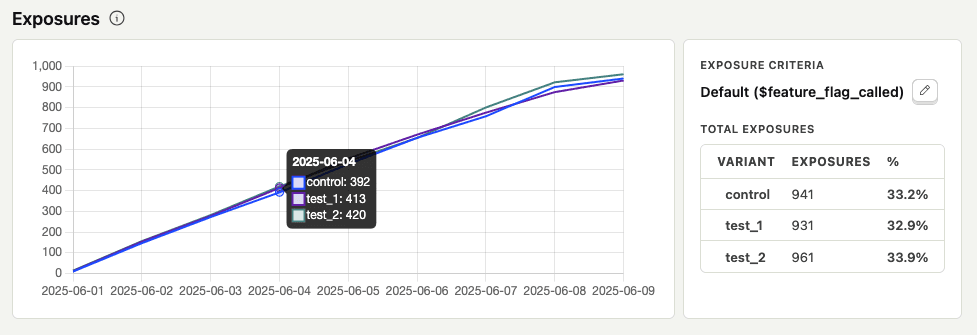

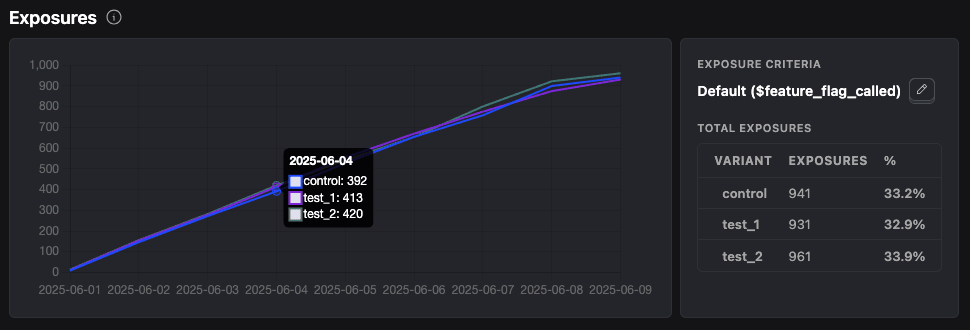

The user interface has also been updated. You'll now see a chart showing experiment exposures, as well as the total number of users in each variant. There's also a count of users who were exposed to more than one variant.

This is now the default engine for all new experiments.

Migrating to the new engine

If you'd like to migrate an old experiment to the new engine, simply create a new experiment using the same feature flag, add your metrics, and launch it. After launching, you can adjust the start date to match your original experiment.

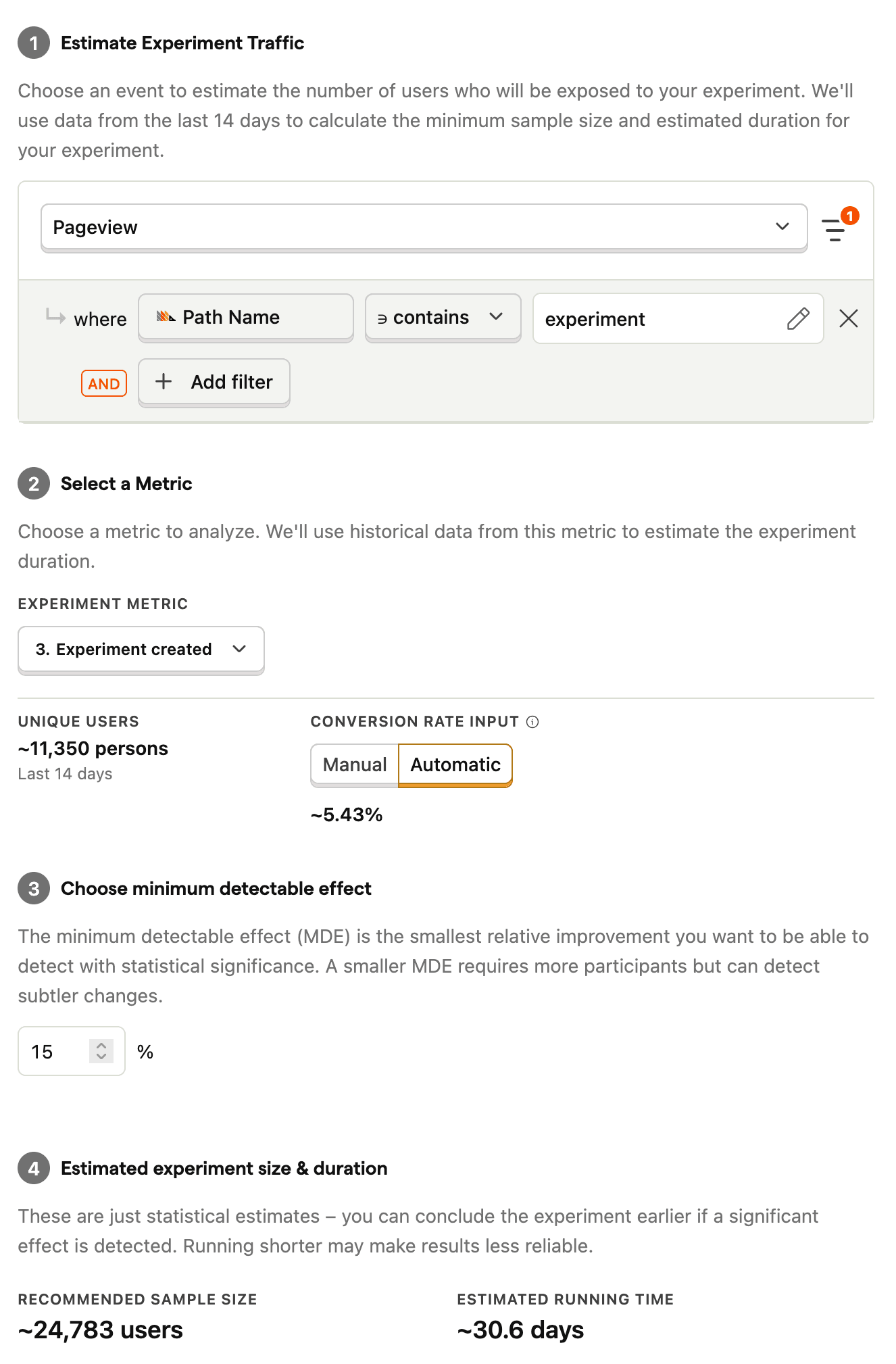

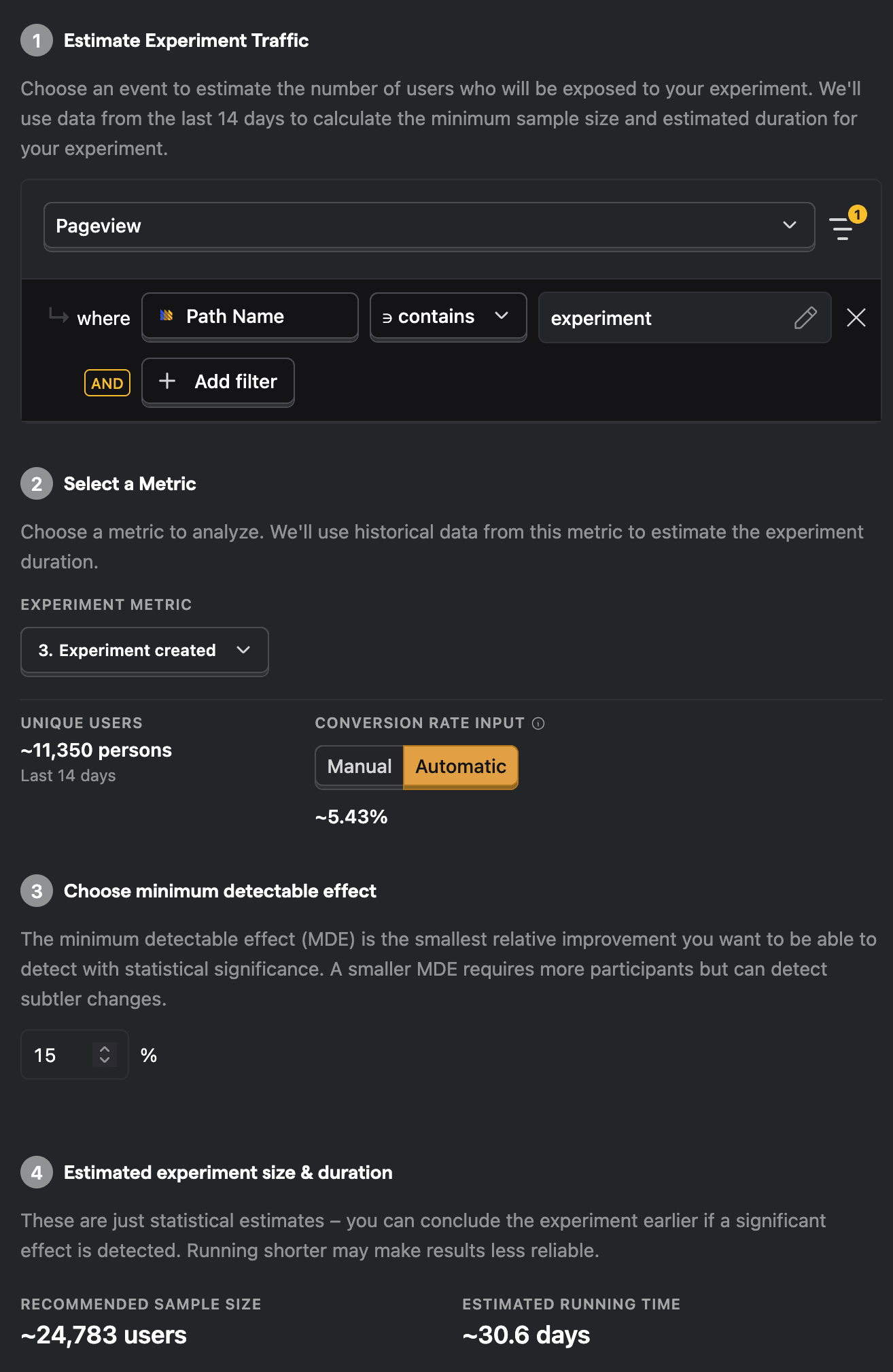

Running time calculator

The new running time calculator provides a more accurate estimate of how long your experiment will need to run. It walks you through a few steps:

Traffic estimation: Choose an event to estimate incoming traffic. For example, a pageview event with a specific path or URL filter.

Select metric: Pick one of the metrics you've added, ideally your primary success metric, to use for estimating running time.

Minimum detectable effect: Set the minimum relative change you'd like to detect. Smaller changes require more data and longer run times. A common range is between 5% and 30%, depending on the impact you're trying to measure and your daily user volume.
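To make the relationship between minimum detectable effect and run time concrete, here is a minimal sketch for a conversion-rate metric. It assumes a standard two-sided z-test for two proportions with 5% significance and 80% power; this is a textbook approximation, not necessarily the formula the calculator uses. The function name and all parameters are hypothetical.

```python
import math
from statistics import NormalDist


def estimate_running_days(baseline_rate, mde_relative, daily_users,
                          num_variants=2, alpha=0.05, power=0.8):
    """Rough run-time estimate (assumed textbook formula, not the product's own).

    baseline_rate: current conversion rate, e.g. 0.10
    mde_relative:  minimum relative change to detect, e.g. 0.05 for 5%
    daily_users:   estimated users entering the experiment per day
    Returns (users needed per variant, days needed).
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + mde_relative)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n_per_variant = math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)
    days = math.ceil(n_per_variant * num_variants / daily_users)
    return n_per_variant, days


small_effect = estimate_running_days(0.10, 0.05, daily_users=1000)
large_effect = estimate_running_days(0.10, 0.30, daily_users=1000)
print(small_effect, large_effect)
```

Because the required sample grows with the inverse square of the absolute difference, halving the minimum detectable effect roughly quadruples the running time.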

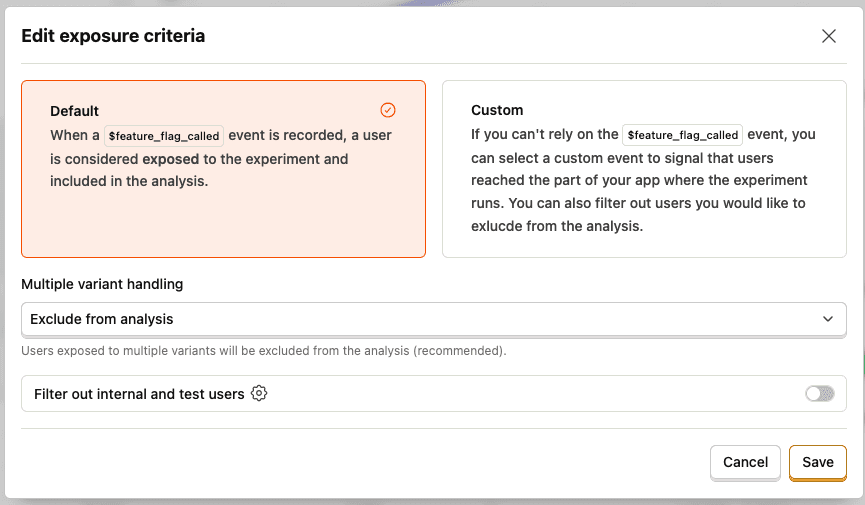

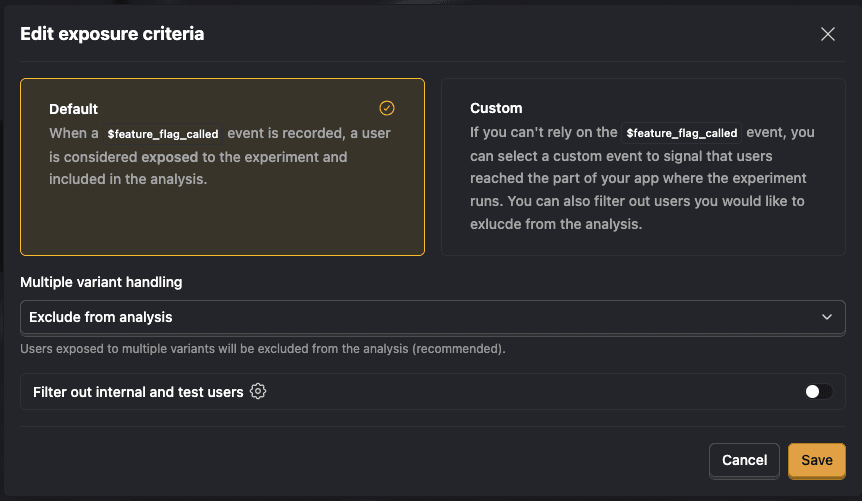

Experiment exposure

By default, a user is considered exposed when we record the $feature_flag_called event — meaning they reached the part of your product where the experiment runs. Only those users are included in the analysis. If the default $feature_flag_called event is not suitable for you, you can specify a different exposure event. We will then use the event property $feature/<flag-key>: <variant-key> to determine which variant a user is in.

The experiment view shows the unique-user count for each variant at the top.

Note: Metric events are only counted if they occur after a user's first exposure.
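The exposure rules above can be sketched roughly as follows for a custom exposure event. The event shape (dictionaries with `user`, `event`, `timestamp`, and `properties` keys) and both function names are assumptions made for illustration; only the `$feature/<flag-key>` property comes from the description above. Treating an event at exactly the exposure timestamp as "after" is also an assumption.

```python
from datetime import datetime


def first_exposures(events, exposure_event, flag_key):
    """Map each user to (first exposure time, variant) for the given flag."""
    prop = f"$feature/{flag_key}"
    first = {}
    for e in sorted(events, key=lambda e: e["timestamp"]):
        if e["event"] != exposure_event:
            continue
        variant = e["properties"].get(prop)
        if variant is None:
            continue  # event carries no variant for this flag
        first.setdefault(e["user"], (e["timestamp"], variant))
    return first


def metric_events_after_exposure(events, metric_event, exposures):
    """Keep metric events from exposed users at or after their first exposure."""
    return [
        e for e in events
        if e["event"] == metric_event
        and e["user"] in exposures
        and e["timestamp"] >= exposures[e["user"]][0]
    ]


events = [
    {"user": "u1", "event": "purchase", "timestamp": datetime(2025, 1, 1), "properties": {}},
    {"user": "u1", "event": "checkout_viewed", "timestamp": datetime(2025, 1, 2),
     "properties": {"$feature/new-checkout": "test"}},
    {"user": "u1", "event": "purchase", "timestamp": datetime(2025, 1, 3), "properties": {}},
    {"user": "u2", "event": "purchase", "timestamp": datetime(2025, 1, 3), "properties": {}},
]
exposures = first_exposures(events, "checkout_viewed", "new-checkout")
counted = metric_events_after_exposure(events, "purchase", exposures)
print(exposures, len(counted))
```

In this sample, the purchase made by `u1` before exposure and the purchase made by the never-exposed `u2` are both left out.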

Users exposed to multiple variants

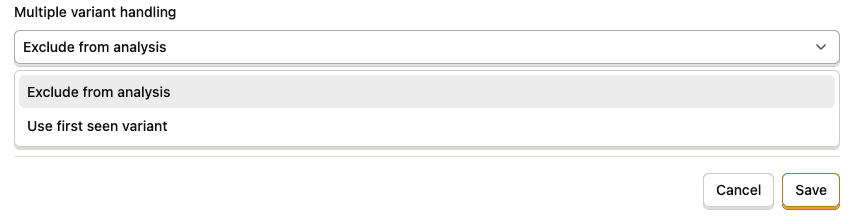

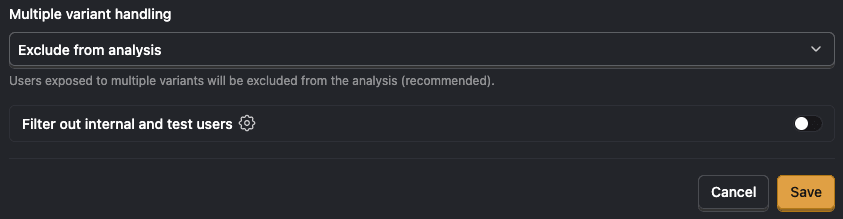

Sometimes, users may have been exposed to more than one variant. To ensure robust experiment results, the default behavior is to exclude them from the analysis. If you still would like to include them, you can change this behavior under "Edit exposure criteria":

Exclude (default): Exclude users exposed to multiple variants. This ensures clean results by only analyzing users who had a single, consistent experience. Excluded users are grouped under $multiple in the exposure table.

First seen variant: Include the users and attribute all metric events to the first variant they encountered. Only choose this option if you know what you are doing.
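As an illustration of the two options, the sketch below assigns each user to a variant from a list of exposure records. The record shape, the function name, and the mode strings `"exclude"` and `"first_seen"` are hypothetical; only the `$multiple` group name is taken from the description above.

```python
def assign_variants(exposure_records, handling="exclude"):
    """Assign one variant per user.

    exposure_records: iterable of (user, timestamp, variant) tuples.
    handling: "exclude" groups multi-variant users under "$multiple";
              "first_seen" attributes them to the first variant they saw.
    """
    seen = {}
    for user, _ts, variant in sorted(exposure_records, key=lambda r: r[1]):
        seen.setdefault(user, []).append(variant)

    assignment = {}
    for user, variants in seen.items():
        if len(set(variants)) == 1 or handling == "first_seen":
            assignment[user] = variants[0]  # list is ordered by timestamp
        else:
            assignment[user] = "$multiple"
    return assignment


records = [
    ("u1", 1, "control"),
    ("u2", 2, "test"),
    ("u2", 5, "control"),  # u2 later sees a second variant
    ("u1", 7, "control"),
]
print(assign_variants(records))
print(assign_variants(records, handling="first_seen"))
```

With the default handling, `u2` lands in `$multiple` and drops out of the comparison; with first seen variant, `u2` is analyzed as part of `test`.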

Configuring metrics

Funnel

This works similarly to funnels in product analytics. Use it to measure conversion rates. Currently, we only show results for the final step of the funnel; breakdowns by step are planned.

When you add a funnel metric, we insert a placeholder for the experiment exposure in the preview. That's because using the actual exposure criteria as the first step would typically result in no data being shown. During evaluation, your chosen exposure event will serve as the actual first step. This means you can add funnels with just one step because exposure is implicitly treated as step one.
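To show what treating exposure as an implicit first step means, here is a simplified sketch that checks whether one user completed a funnel. The function name and the `(timestamp, event_name)` tuple shape are assumptions, and real funnels have additional options (such as ordering modes) that this ignores.

```python
def completed_funnel(exposure_time, user_events, steps):
    """Return True if the user did every step, in order, after exposure.

    exposure_time: the user's first exposure (the implicit step one)
    user_events:   list of (timestamp, event_name) tuples
    steps:         ordered event names configured on the funnel metric
    """
    remaining = list(steps)
    for ts, name in sorted(user_events):
        if ts < exposure_time:
            continue  # events before exposure never advance the funnel
        if remaining and name == remaining[0]:
            remaining.pop(0)
    return not remaining


user_events = [(5, "purchase"), (12, "add_to_cart"), (15, "purchase")]
print(completed_funnel(10, user_events, ["add_to_cart", "purchase"]))
print(completed_funnel(10, [(5, "purchase")], ["purchase"]))
```

A one-step funnel such as `["purchase"]` is valid here because exposure already acts as the entry step, so the conversion rate is the share of exposed users who later purchased.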

Mean

Use this to track metrics like total event count or summed values (e.g., revenue). Here's how it works:

- We aggregate the chosen value for each user using your selected method (count, sum, or average).

- We then compute the mean of those values for each variant.

- This mean is used to determine the statistical difference between variants.

For example:

- If you choose sum of revenue, we calculate total revenue per user, then take the mean across users.

- If you select average revenue (not supported yet, but coming soon), we first compute each user's average (total revenue / number of events), then average those values across users.
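The two-stage aggregation described above can be sketched as follows. The input shapes and the function name are hypothetical, and counting exposed users with no metric events as zero is an assumption of this sketch.

```python
from collections import defaultdict
from statistics import mean


def variant_means(metric_rows, user_variants, method="sum"):
    """Aggregate per user first, then average those values per variant.

    metric_rows:   iterable of (user, value) pairs, one per metric event
    user_variants: {user: variant} for every exposed user
    method:        "count", "sum", or "average"
    """
    aggregate = {"count": len, "sum": sum, "average": mean}[method]

    values_by_user = defaultdict(list)
    for user, value in metric_rows:
        values_by_user[user].append(value)

    per_variant = defaultdict(list)
    for user, variant in user_variants.items():
        values = values_by_user.get(user, [])
        # Assumption: exposed users without events contribute 0.
        per_variant[variant].append(aggregate(values) if values else 0)

    return {variant: mean(vals) for variant, vals in per_variant.items()}


rows = [("a", 10), ("a", 20), ("b", 5)]
variants = {"a": "test", "b": "test", "c": "test", "d": "control"}
print(variant_means(rows, variants, method="sum"))
print(variant_means(rows, variants, method="count"))
```

Here user `a` has a per-user sum of 30, `b` has 5, and `c` has 0, so the `test` mean is their average across three users rather than the total divided by the number of events.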

Ratio

Ratio metrics, such as average order value, are not yet supported, but they're on the roadmap. A ratio metric divides one metric by another metric instead of by the number of exposed users. For example, a mean metric on revenue divides total revenue by every user in the experiment, including those who never placed an order. A ratio metric for average order value would instead divide total revenue by the number of users who actually made a purchase, which describes how much buyers spend rather than how much the average exposed user spends.
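The difference between the two denominators is easiest to see with numbers. The figures and function names below are made up for illustration, and since ratio metrics are not yet supported, this is only a sketch of the arithmetic, not of any existing feature.

```python
def revenue_per_exposed_user(revenue_by_user, exposed_users):
    """Mean-style metric: total revenue over all exposed users."""
    total = sum(revenue_by_user.get(u, 0) for u in exposed_users)
    return total / len(exposed_users)


def revenue_per_purchaser(revenue_by_user, exposed_users):
    """Ratio-style metric: total revenue over users who purchased."""
    purchasers = [u for u in exposed_users if revenue_by_user.get(u, 0) > 0]
    if not purchasers:
        return 0.0  # no purchases, so there is nothing to divide by
    return sum(revenue_by_user[u] for u in purchasers) / len(purchasers)


revenue = {"a": 100, "b": 200}      # illustrative values only
exposed = ["a", "b", "c", "d"]      # c and d never purchased
print(revenue_per_exposed_user(revenue, exposed))  # 75.0
print(revenue_per_purchaser(revenue, exposed))     # 150.0
```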

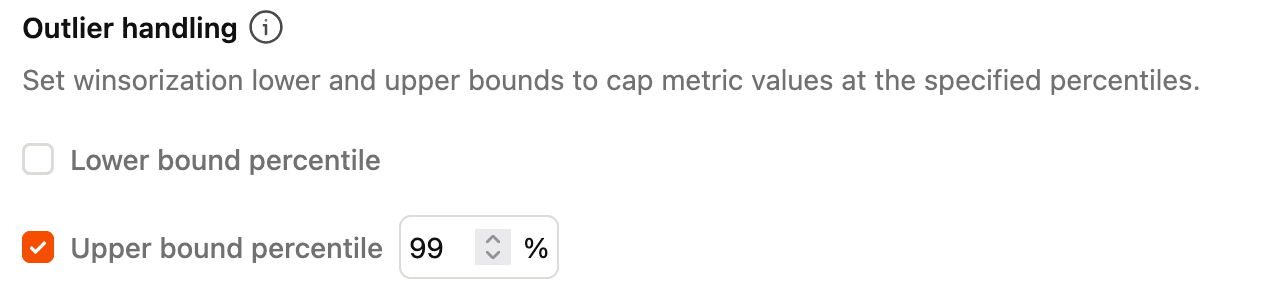

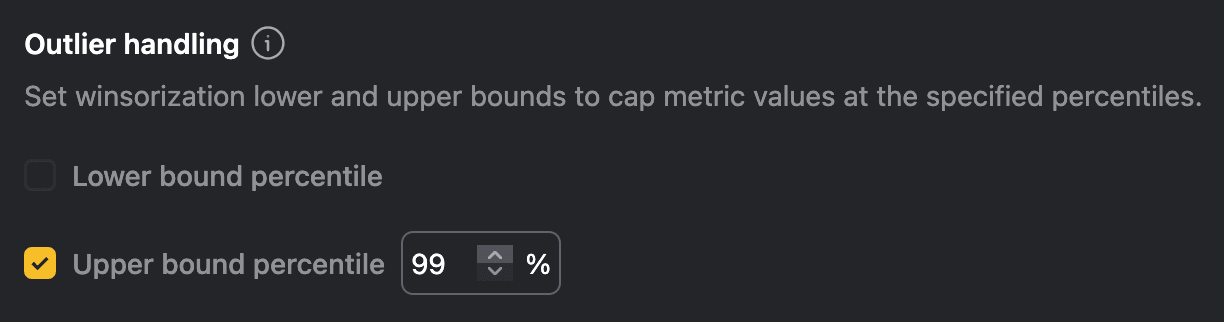

Outlier handling

You can limit the impact of extreme values by capping metric data at specified percentile thresholds using configurable lower and upper bounds. Values beyond those thresholds are replaced with the threshold value, so users with extreme values still count toward the analysis but cannot dominate it.

This technique is commonly known as Winsorization and is especially useful for metrics with highly skewed distributions, such as revenue or total event counts, where a small subset of users can produce unusually high values that distort overall results.
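A minimal sketch of percentile capping is shown below. It uses the nearest-rank percentile definition, which is an assumption; the engine may interpolate percentiles differently. The function name and parameters are hypothetical.

```python
import math


def winsorize(values, lower_pct=0.0, upper_pct=99.0):
    """Cap each value to the [lower_pct, upper_pct] percentile range."""
    ordered = sorted(values)

    def percentile(pct):
        # Nearest-rank percentile (assumed definition for this sketch).
        if pct <= 0:
            return ordered[0]
        rank = math.ceil(pct * len(ordered) / 100)
        return ordered[rank - 1]

    low, high = percentile(lower_pct), percentile(upper_pct)
    return [min(max(v, low), high) for v in values]


revenue = [1, 2, 3, 4, 5, 6, 7, 8, 9, 1000]
capped = winsorize(revenue, upper_pct=90)
print(capped)  # [1, 2, 3, 4, 5, 6, 7, 8, 9, 9]
print(sum(revenue) / len(revenue), sum(capped) / len(capped))
```

The single value of 1000 is pulled down to the 90th-percentile value of 9, so the mean drops from 104.5 to 5.4 without discarding that user.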

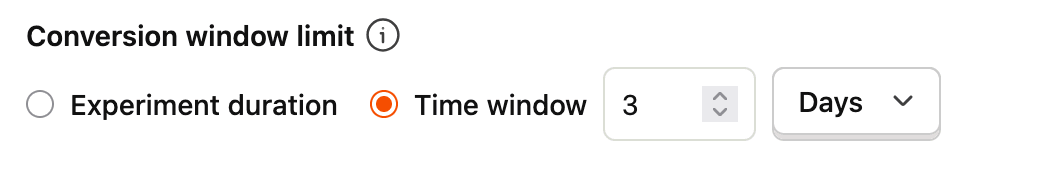

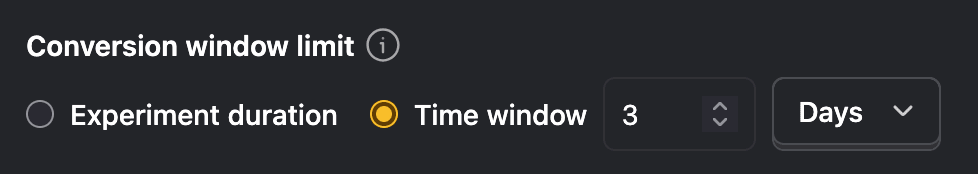

Conversion windows

Conversion windows restrict metric events to those that occur within a defined time window following a user's initial exposure to the experiment. Metric events outside of the conversion window aren't included in experiment calculations.

This ensures that only relevant, post-exposure behavior is included in the analysis.
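As a sketch of the filtering rule, the function below keeps only the events that fall inside a user's conversion window. The names are hypothetical, and treating both ends of the window as inclusive is an assumption.

```python
from datetime import datetime, timedelta


def events_in_conversion_window(event_times, first_exposure, window):
    """Keep event times within `window` after the user's first exposure."""
    window_end = first_exposure + window
    return [t for t in event_times if first_exposure <= t <= window_end]


first_exposure = datetime(2025, 1, 1, 12, 0)
event_times = [
    datetime(2025, 1, 1, 9, 0),    # before exposure: dropped
    datetime(2025, 1, 2, 12, 0),   # one day after exposure: kept
    datetime(2025, 1, 10, 12, 0),  # nine days after exposure: dropped
]
kept = events_in_conversion_window(event_times, first_exposure, timedelta(days=7))
print(kept)
```

Because the window is anchored to each user's own first exposure, users who enter the experiment late get the same amount of time to convert as users who entered on day one.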