LangChain LLM analytics installation


- 1

Install the PostHog SDK

(Required) Setting up analytics starts with installing the PostHog SDK for your language. LLM analytics works best with our Python and Node SDKs.

- 2

Install LangChain and OpenAI SDKs

(Required) Install LangChain and the OpenAI SDK. The PostHog SDK instruments your LLM calls by wrapping LangChain.
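The wrapping idea can be sketched in plain Python. This is a simplified illustration, not PostHog's implementation: the real integration hooks into LangChain through the `CallbackHandler` described in step 3. Here a hypothetical `instrument` decorator times a stand-in `fake_llm` function, records an event using property names from the table in step 4, and hands the provider's response back to the caller unchanged. The response shape (`text`, `input_tokens`, `output_tokens`) is an assumption made for the example.

```python
import functools
import time
from typing import Any, Callable, Dict, List

Message = Dict[str, str]

# Events recorded by the wrapper; a real SDK would queue these for PostHog.
captured_events: List[Dict[str, Any]] = []


def instrument(model: str) -> Callable:
    """Hypothetical decorator: wrap an LLM call and record an $ai_generation-style event."""

    def decorator(call_llm: Callable[[List[Message]], Dict[str, Any]]) -> Callable:
        @functools.wraps(call_llm)
        def wrapper(messages: List[Message]) -> Dict[str, Any]:
            start = time.perf_counter()
            response = call_llm(messages)  # the request still goes straight to the provider
            latency_s = time.perf_counter() - start
            captured_events.append(
                {
                    "event": "$ai_generation",
                    "properties": {
                        "$ai_model": model,
                        "$ai_latency": latency_s,
                        "$ai_input": messages,
                        "$ai_input_tokens": response["input_tokens"],
                        "$ai_output_choices": [
                            {"role": "assistant", "content": response["text"]}
                        ],
                        "$ai_output_tokens": response["output_tokens"],
                    },
                }
            )
            return response  # the caller sees exactly what the provider returned

        return wrapper

    return decorator


@instrument(model="gpt-5-mini")
def fake_llm(messages: List[Message]) -> Dict[str, Any]:
    # Hypothetical stand-in for a real provider call.
    return {"text": "Hello!", "input_tokens": 12, "output_tokens": 3}


result = fake_llm([{"role": "user", "content": "Hi"}])
print(result["text"])  # Hello!
```

Because the wrapper only observes the call, removing it changes nothing about what your application receives.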

Proxy note: These SDKs do not proxy your calls. They only fire off an asynchronous request to PostHog in the background to send the captured data. You can also use LLM analytics with other SDKs or our API, but you then need to capture the data in the right format yourself. See the schema in the manual capture section for details.
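The "fire off an async call in the background" behavior can be sketched with a queue and a worker thread. This is a generic pattern shown under stated assumptions, not the PostHog SDK's internals: `BackgroundSender` and its `send` callback are hypothetical names, and `send` stands in for whatever transport actually delivers events to PostHog. The LLM call itself never passes through this object; only the analytics event does, after the response is already in hand.

```python
import queue
import threading
from typing import Any, Callable, Dict, List, Optional


class BackgroundSender:
    """Hypothetical sender: hands events to a daemon thread so callers never wait on analytics."""

    def __init__(self, send: Callable[[Dict[str, Any]], None]) -> None:
        self._send = send
        self._queue: "queue.Queue[Optional[Dict[str, Any]]]" = queue.Queue()
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def capture(self, event: Dict[str, Any]) -> None:
        # Non-blocking: enqueue and return to the caller immediately.
        self._queue.put(event)

    def _run(self) -> None:
        while True:
            event = self._queue.get()
            try:
                if event is None:  # shutdown sentinel
                    return
                try:
                    self._send(event)
                except Exception:
                    pass  # an analytics failure must never affect the LLM call
            finally:
                self._queue.task_done()

    def flush(self) -> None:
        # Block until every queued event has been handed to `send`.
        self._queue.join()

    def shutdown(self) -> None:
        # Stop the worker thread after it drains earlier events.
        self._queue.put(None)
        self._thread.join()


# Usage: collect "sent" events in a list instead of making network calls.
sent: List[Dict[str, Any]] = []
sender = BackgroundSender(send=sent.append)

llm_response = {"text": "Hello!"}  # already returned to your app by the provider
sender.capture({"event": "$ai_generation", "properties": {"$ai_model": "gpt-5-mini"}})
sender.flush()  # e.g. before a short-lived script exits
sender.shutdown()
print(len(sent))  # 1
```

A daemon thread means a slow or failing analytics endpoint cannot block the LLM call; the trade-off in this sketch is that events still queued when the process exits are lost unless you call `flush()` first.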

- 3

Initialize PostHog and LangChain

(Required) Initialize PostHog with your project token and host from your project settings, then pass the client to the LangChain `CallbackHandler` wrapper. Optionally, you can provide a user distinct ID, a trace ID, PostHog properties, groups, and privacy mode.

Note: If you want to capture LLM events anonymously, don't pass a distinct ID to the `CallbackHandler`. See our docs on anonymous vs identified events to learn more.

- 4

Call LangChain

RequiredWhen you invoke your chain, pass the

callback_handlerin theconfigas part of yourcallbacks:PostHog automatically captures an

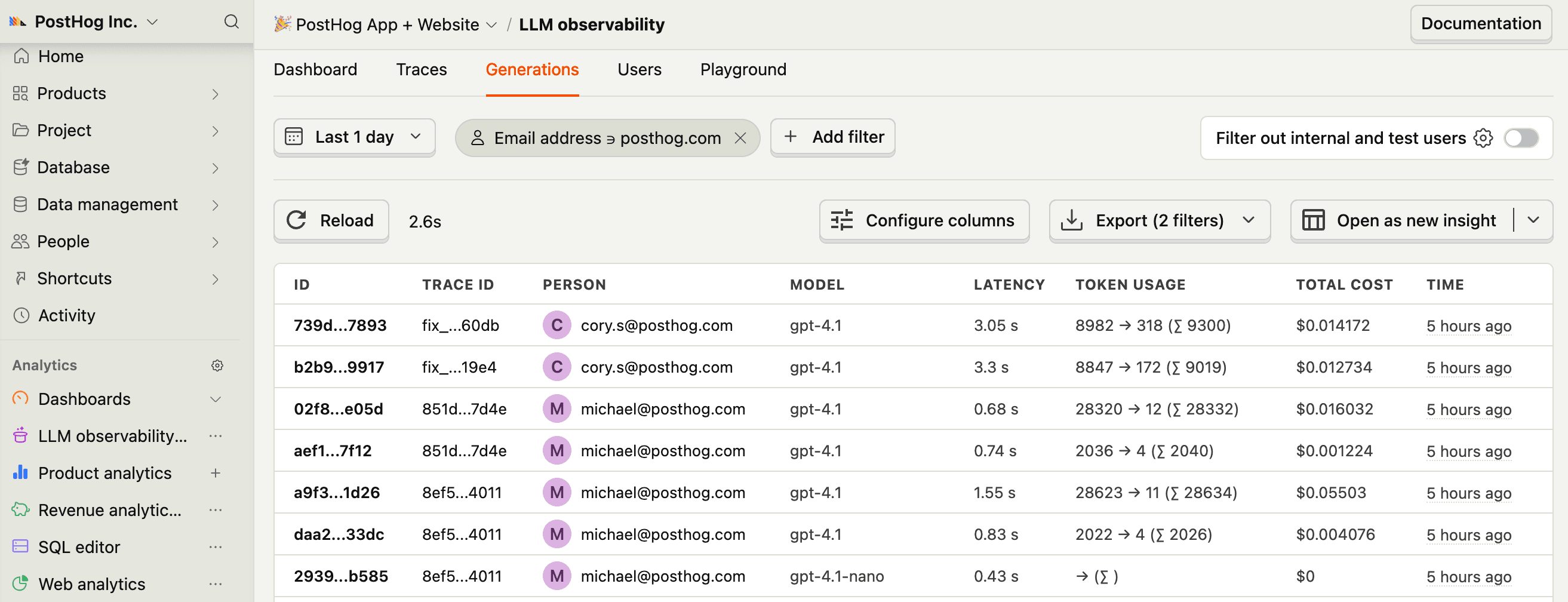

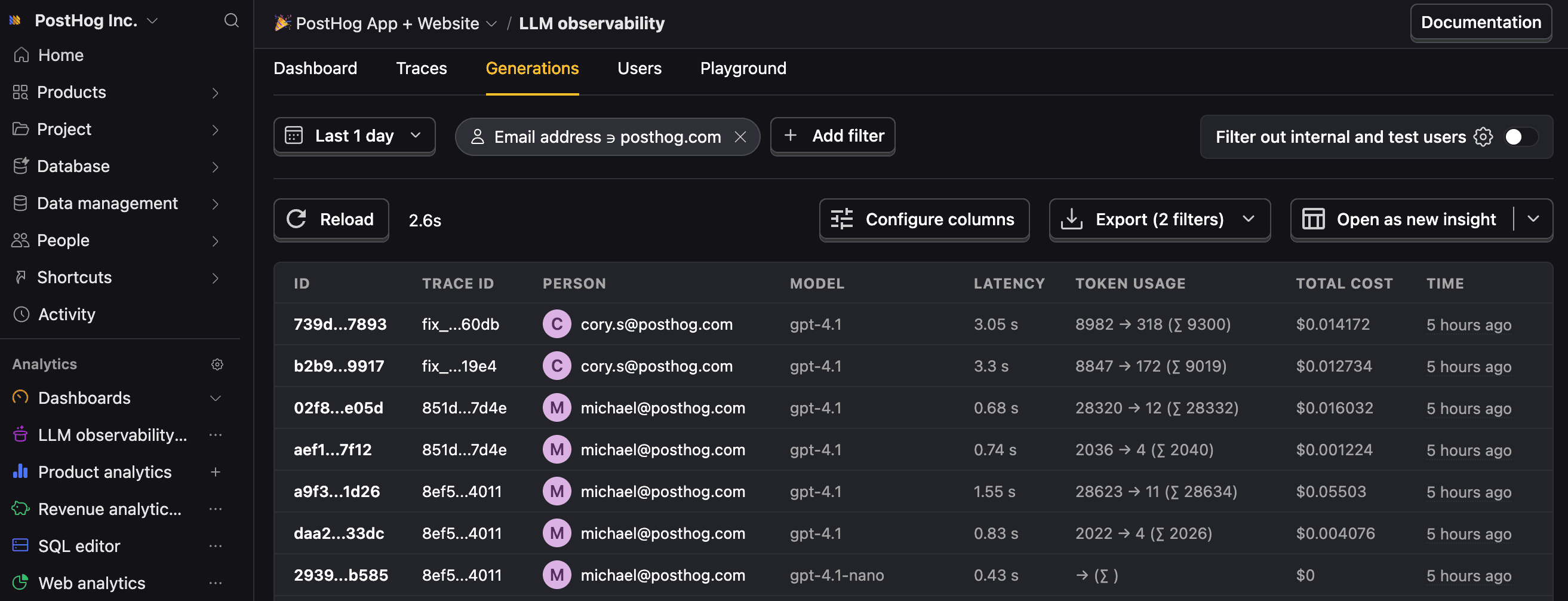

$ai_generationevent along with these properties:Property Description $ai_modelThe specific model, like gpt-5-miniorclaude-4-sonnet$ai_latencyThe latency of the LLM call in seconds $ai_time_to_first_tokenTime to first token in seconds (streaming only) $ai_toolsTools and functions available to the LLM $ai_inputList of messages sent to the LLM $ai_input_tokensThe number of tokens in the input (often found in response.usage) $ai_output_choicesList of response choices from the LLM $ai_output_tokensThe number of tokens in the output (often found in response.usage)$ai_total_cost_usdThe total cost in USD (input + output) [...] See full list of properties It also automatically creates a trace hierarchy based on how LangChain components are nested.
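How nested LangChain components can turn into a trace hierarchy is sketched below by linking runs through their parent IDs. LangChain callbacks do report a `run_id` and `parent_run_id` for each run; everything else here is an assumption for illustration: the `Run` class, `build_tree`, `render`, and the simplified mapping (root run to `$ai_trace`, LLM runs to `$ai_generation`, other runs to `$ai_span`). Check the manual capture schema for the exact event names and properties. The cost roll-up applies the table's definition of total cost (input + output) to each run and sums it up the tree; the dollar amounts are made up.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Run:
    """One LangChain-style run (chain, prompt, LLM call, ...); hypothetical shape."""

    run_id: str
    parent_run_id: Optional[str]
    name: str
    kind: str  # e.g. "chain", "prompt", "llm"
    input_cost_usd: float = 0.0
    output_cost_usd: float = 0.0
    children: List["Run"] = field(default_factory=list)

    @property
    def event_name(self) -> str:
        # Simplified mapping: root -> trace, LLM runs -> generations, the rest -> spans.
        if self.parent_run_id is None:
            return "$ai_trace"
        return "$ai_generation" if self.kind == "llm" else "$ai_span"

    def rolled_up_cost_usd(self) -> float:
        # A run's own cost is input + output; parents add their children's totals.
        own = self.input_cost_usd + self.output_cost_usd
        return own + sum(child.rolled_up_cost_usd() for child in self.children)


def build_tree(runs: List[Run]) -> Run:
    """Attach each run to its parent and return the single root run."""
    by_id: Dict[str, Run] = {run.run_id: run for run in runs}
    roots = [run for run in runs if run.parent_run_id is None]
    if len(roots) != 1:
        raise ValueError(f"expected exactly one root run, found {len(roots)}")
    for run in runs:
        if run.parent_run_id is not None:
            by_id[run.parent_run_id].children.append(run)
    return roots[0]


def render(run: Run, depth: int = 0) -> List[str]:
    """Return one indented line per run, parents before children."""
    lines = [f"{'  ' * depth}{run.event_name} {run.name}"]
    for child in run.children:
        lines.extend(render(child, depth + 1))
    return lines


# Usage: a prompt -> model chain, with runs reported parent-first.
runs = [
    Run("r1", None, "RunnableSequence", "chain"),
    Run("r2", "r1", "ChatPromptTemplate", "prompt"),
    Run("r3", "r1", "ChatOpenAI", "llm", input_cost_usd=0.0001, output_cost_usd=0.0004),
]
root = build_tree(runs)
print("\n".join(render(root)))
# $ai_trace RunnableSequence
#   $ai_span ChatPromptTemplate
#   $ai_generation ChatOpenAI
print(f"{root.rolled_up_cost_usd():.4f}")  # 0.0005
```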

- 5

Next steps

(Recommended) Now that you're capturing AI conversations, continue with the resources below to learn what else LLM analytics enables within the PostHog platform.

| Resource | Description |
|---|---|
| Basics | Learn the basics of how LLM calls become events in PostHog. |
| Generations | Read about the `$ai_generation` event and its properties. |
| Traces | Explore the trace hierarchy and how to use it to debug LLM calls. |
| Spans | Review spans and their role in representing individual operations. |
| Analyze LLM performance | Learn how to create dashboards to analyze LLM performance. |