Your modern data stack on ~~quack~~ DuckDB, fully wired with PostHog AI

The most flexible modern data stack – built on DuckDB, designed to scale, and wired up with our omniscient AI

Your data needs flexibility, tooling, and actually-useful AI that's not stuck in a silo. We provide it all in a seamless data stack.

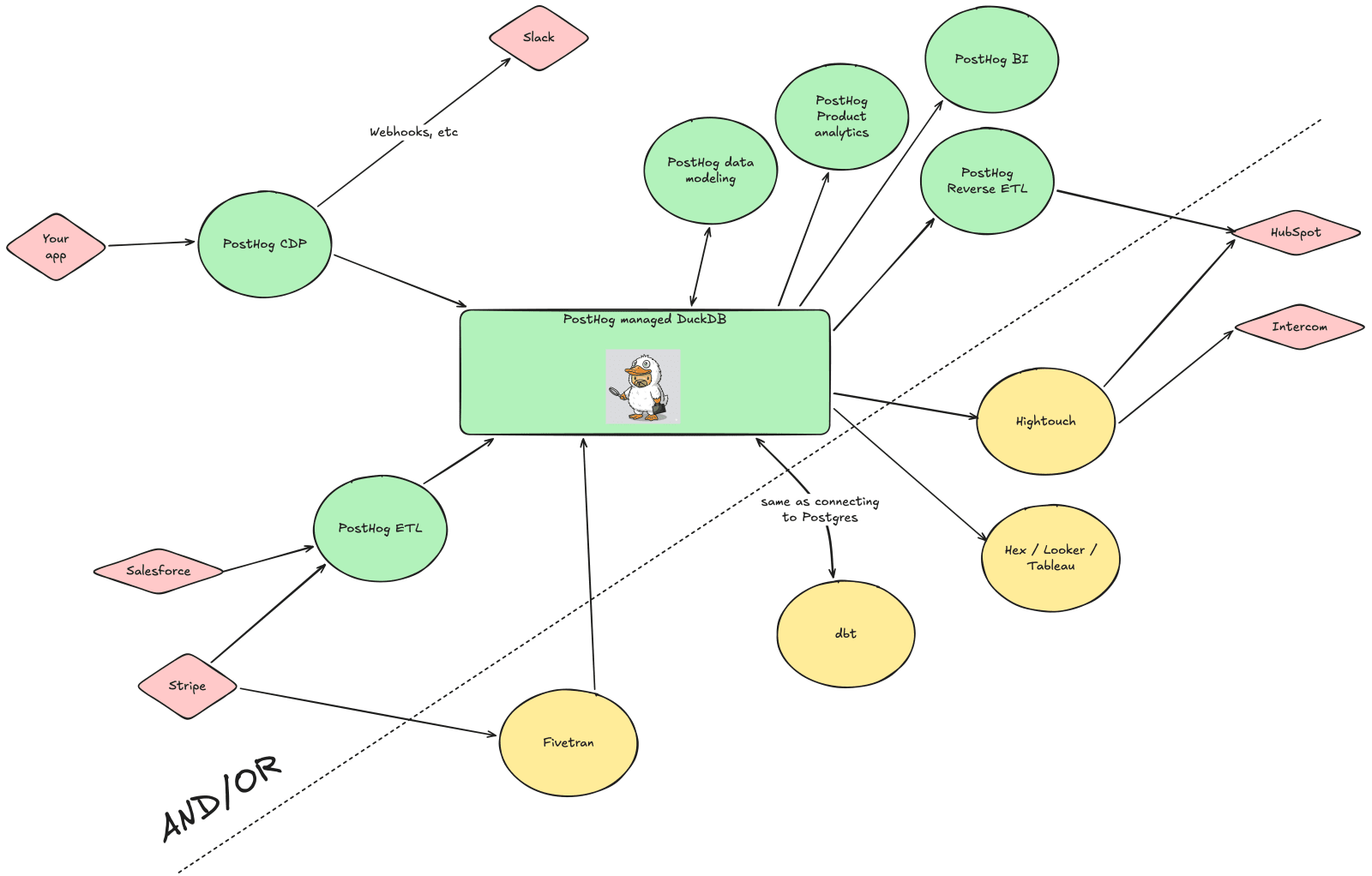

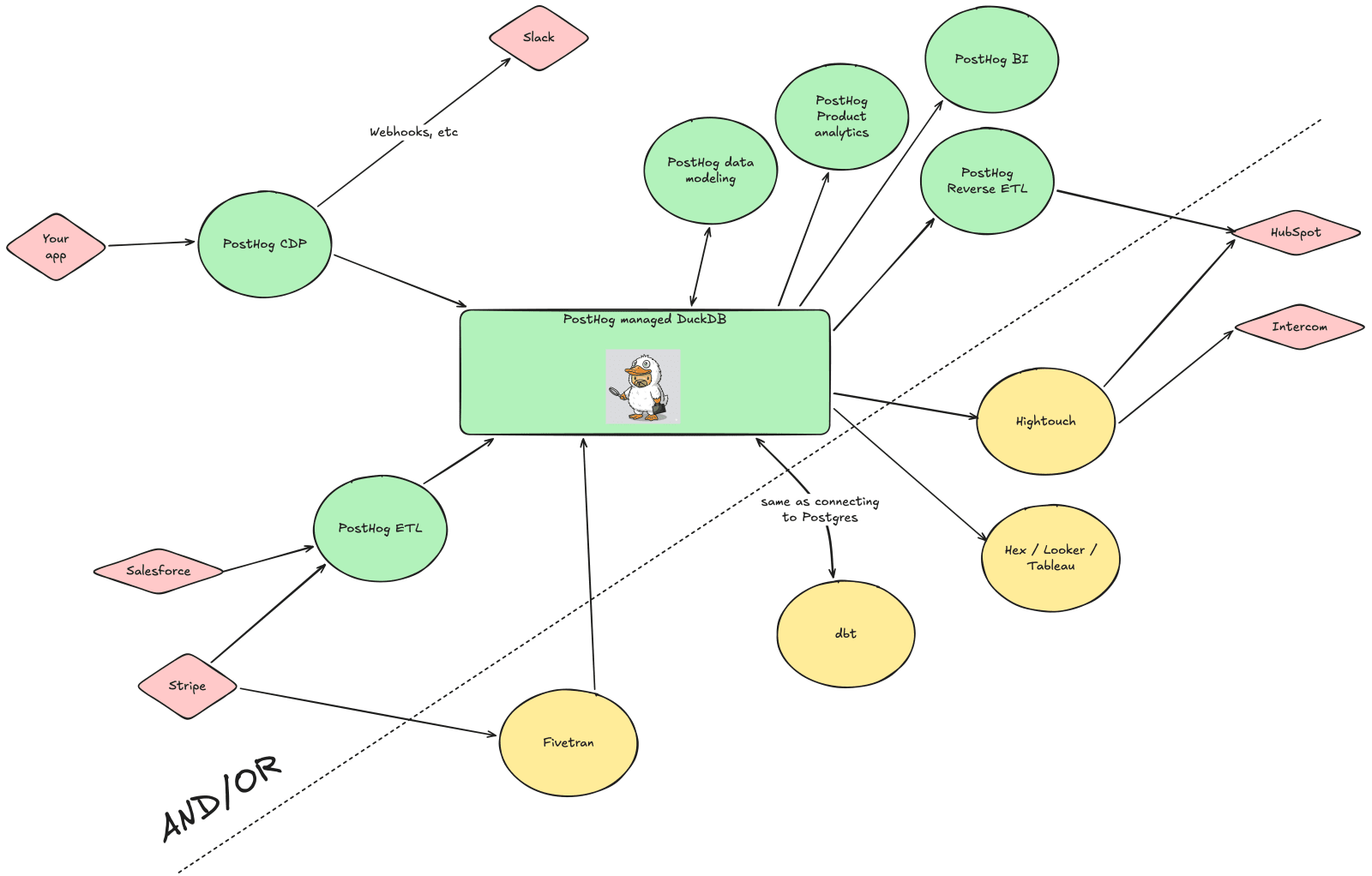

Bring your own tools like dbt and Hex to customize your experience, or use our built-in tooling to get started quickly. Anything that connects to Postgres can connect to your PostHog DuckDB warehouse using our Duckgres wrapper.
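As a rough illustration of what "anything that connects to Postgres" can look like in practice, here is a minimal Python sketch. The host, port, database name, user, password, and the `sslmode` setting are all placeholder assumptions, not real PostHog values; use the credentials you are given. The `run_query` helper assumes the third-party `psycopg` (version 3) driver is installed, and it is not called here because it needs a live warehouse.

```python
from urllib.parse import quote, urlencode


def build_postgres_dsn(host, port, database, user, password, sslmode="require"):
    """Build a standard Postgres connection URI.

    Every argument is a placeholder; sslmode="require" is an assumption,
    not a documented PostHog requirement.
    """
    query = urlencode({"sslmode": sslmode})
    return (
        f"postgresql://{quote(user, safe='')}:{quote(password, safe='')}"
        f"@{host}:{port}/{quote(database, safe='')}?{query}"
    )


def run_query(dsn, sql):
    """Run one query with psycopg 3 (assumed installed via `pip install psycopg`)."""
    import psycopg  # imported lazily so the DSN helper works without the driver

    with psycopg.connect(dsn) as conn:
        return conn.execute(sql).fetchall()


# Hypothetical host, database, and credentials, for illustration only.
dsn = build_postgres_dsn(
    host="warehouse.example.com",
    port=5432,
    database="analytics",
    user="data_team",
    password="p@ss/word",
)
```

With real credentials, something like `run_query(dsn, "SELECT 1")` would be a quick connectivity check. The same connection URI format is what most Postgres-compatible tools ask for.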

A modern data stack that's built for data teams and loved by product teams

PostHog's data infrastructure is built for data engineers who need to build a robust and flexible data stack to house all their business data.

And because the data is seamlessly connected to PostHog's product ecosystem, product teams get to continue using the tools they love - like product analytics, feature flags, and surveys - all powered up by your clean & modeled data. It's, quite literally, the best of both worlds.

Fully wired with our omniscient AI

With all your data in one place, PostHog AI becomes truly omniscient about your business. Generate SQL queries, model your data, and get insights about your users' behavior, all with PostHog AI, so you can work faster than ever before.

And better yet - PostHog AI allows everyone on your team to work with your modeled data. Product teams can ask questions and get insights without relying on the data team, which means data teams can focus on more strategic work.

You get the keys to the (data) castle

We give you the credentials to directly access your data store for complete flexibility, so you can bring whatever tooling fits your workflow. We also offer built-in tooling for CDP, data modeling, and more so you can get started quickly.

Data stack products

This is what we offer built-in - but feel free to bring your own tools if that's what you need.

- Managed DuckDB warehouse (Beta)

A single-tenant DuckDB warehouse that's automatically filled with your PostHog data - and anything else you sync in.

Perfect for: data engineers and analysts

- PostHog AI

Omniscient AI for your business. Generate SQL queries, model your data, and get insights about your users' behavior, all with PostHog AI, so you can work faster than ever before.

Perfect for: product teams, data engineers, and analysts

- Data sources & import (ELT)

Use our bulk import sources to get data into your warehouse, including data from databases, ad platforms, SaaS tools, and more.

Perfect for: product teams, data engineers, and analysts

- CDP

Stream data in through our 60+ sources and send it wherever you need it.

Perfect for: product teams, data engineers, and analysts

- Data modeling (Beta)

Build modular, testable data tables that load in an instant.

Perfect for: data analysts and product teams

Not quite ready for: data engineers. We recommend bringing your favorite tools like dbt for now until our tooling is more mature.

- SQL editor

Explore your data with SQL, build business intelligence dashboards, and visualize key metrics.

Perfect for: product teams

Not quite ready for: data analysts. We recommend bringing your favorite tools like Hex for now until our tooling is more mature.

- Business intelligence (BI)

Visualize your data with interactive dashboards and ad-hoc analyses right in PostHog.

Perfect for: product teams

Not quite ready for: data analysts. We recommend bringing your favorite tools like Hex for now until our tooling is more mature.

- Reverse ETL & export (Beta)

Get data out to the tools that run your business with reverse ETL & batch exports.

Perfect for: data engineers, product teams, and marketing teams

How our support engineers use the data warehouse


You can use data in the PostHog warehouse for almost anything, including building custom insights and dashboards. One of the ways we use it ourselves is to track our support metrics, such as SLAs and first response times.

Abigail Richardson writes up a summary based on this data and shares it with the exec team weekly, and in the video above she explains how she gathers the data using SQL.
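To give a feel for the kind of SQL involved in support metrics like first response time and SLA attainment, here is a small sketch. It is not the query from the video: the `tickets` table, its columns, the sample rows, and the one-hour SLA are all invented for illustration, and Python's built-in `sqlite3` stands in for the warehouse so the example runs anywhere.

```python
import sqlite3

# Stand-in database: in-memory SQLite, NOT the PostHog warehouse.
conn = sqlite3.connect(":memory:")

# Hypothetical schema; timestamps are stored as epoch seconds to keep the SQL portable.
conn.execute(
    "CREATE TABLE tickets (id INTEGER, created_at INTEGER, first_response_at INTEGER)"
)
conn.executemany(
    "INSERT INTO tickets VALUES (?, ?, ?)",
    [
        (1, 0, 600),    # answered after 10 minutes
        (2, 0, 1800),   # answered after 30 minutes
        (3, 0, 7200),   # answered after 120 minutes
        (4, 0, None),   # not answered yet, excluded below
    ],
)

SLA_SECONDS = 3600  # assumed one-hour first response target

query = """
SELECT
    COUNT(*) AS answered_tickets,
    AVG((first_response_at - created_at) / 60.0) AS avg_first_response_minutes,
    AVG(CASE WHEN first_response_at - created_at <= ? THEN 1.0 ELSE 0.0 END) AS sla_hit_rate
FROM tickets
WHERE first_response_at IS NOT NULL
"""

answered, avg_minutes, sla_hit_rate = conn.execute(query, (SLA_SECONDS,)).fetchone()
print(f"{answered} answered, avg {avg_minutes:.1f} min, SLA hit rate {sla_hit_rate:.0%}")
```

A weekly summary would typically add a date filter or group by week; the aggregation pattern stays the same.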